Hi everyone! Recently I have been thinking a lot about the way HEEx renders lists. People are generally surprised by the huge payloads being sent on any list change, and I had to work around this in one of my recent projects to keep the site responsive.

I think there’s a possible payload optimisation inspired by client-side VDOM implementations. Let me explain with a very simple Phoenix Playground script that renders a list.

```elixir
Mix.install([{:phoenix_playground, "~> 0.1.3"}])

defmodule DemoLive do
  use Phoenix.LiveView

  def render(assigns) do
    ~H"""
    <button phx-click="add">add</button>
    <ul>
      <li :for={i <- @items}>
        <%= i.name %>
      </li>
    </ul>
    """
  end

  def mount(_params, _session, socket) do
    {:ok, assign(socket, :items, [])}
  end

  def handle_event("add", _params, socket) do
    items = socket.assigns.items
    id = length(items)
    new_item = %{id: id + 1, name: "New#{id + 1}"}
    {:noreply, assign(socket, :items, [new_item | items])}
  end
end

PhoenixPlayground.start(live: DemoLive)
```

The problem

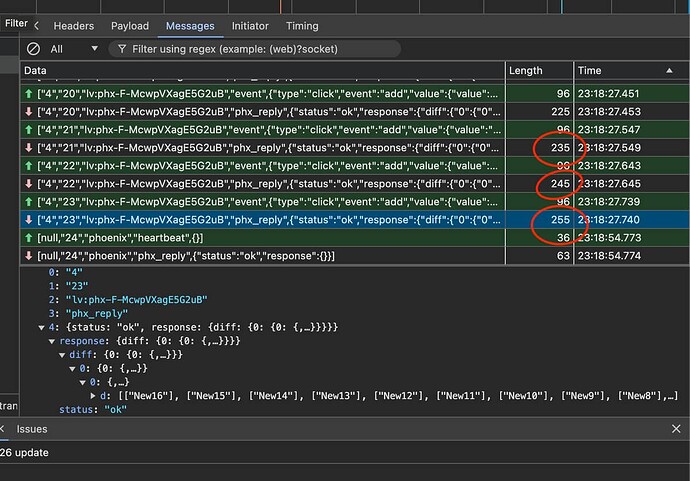

LiveView has many exciting optimizations, but they mostly don’t apply if you’re using a “:for” loop. In my basic example, on each list update LiveView sends all the elements again, even if most of them are exactly the same as before.

In this case, the problem could be solved trivially by using Streams. But in many other cases that’s not feasible because:

- Streams work only as top-level assigns. If you have a list as an attribute of an object (e.g. Ecto associations like `current_user.posts`), then using streams is not easy.

- The Streams API is different. You need to know exactly how the list changes. It might complicate your business layer (contexts) just to get that information.

- Streams give you both memory optimization and payload optimization. I believe the second one should be available out of the box, even without using streams.

Idea

This problem is not new. Multiple frontend frameworks use a Virtual DOM, where they need to calculate a minimal patch between the VDOM and the DOM. They simply require a :key attribute when rendering a list, as Vue.js does, for example.

Then, it’s rather trivial to figure out:

- if element is new (key not present in the old state, but present in the new one)

- if element was removed (key present in the old state, but no longer in the new one)

- if element was updated (key present in both the old and the new state, in which case we should calculate the diff recursively for these elements)
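To make the three cases above concrete, here is a minimal sketch in plain Elixir. `KeyedDiff` and `classify/3` are hypothetical names made up for illustration, they are not part of LiveView or HEEx, and the sketch only compares key sets (it does not look at element content yet).

```elixir
defmodule KeyedDiff do
  @moduledoc "Hypothetical helper for illustration only, not a LiveView API."

  # Splits keys into added / removed / kept by comparing the key sets
  # of the old and the new list. `key_fun` extracts the key from an item.
  def classify(old_items, new_items, key_fun) do
    old_keys = MapSet.new(old_items, key_fun)
    new_keys = MapSet.new(new_items, key_fun)

    %{
      # present only in the new state
      added: MapSet.difference(new_keys, old_keys) |> Enum.sort(),
      # present only in the old state
      removed: MapSet.difference(old_keys, new_keys) |> Enum.sort(),
      # present in both: candidates for a recursive diff
      kept: MapSet.intersection(old_keys, new_keys) |> Enum.sort()
    }
  end
end

old = [%{id: 2, name: "New2"}, %{id: 1, name: "New1"}]
new = [%{id: 3, name: "New3"}, %{id: 2, name: "Renamed"}]

KeyedDiff.classify(old, new, & &1.id)
# => %{added: [3], kept: [2], removed: [1]}
```

Only the keys in `kept` would need a closer look to decide whether anything actually changed.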

So, maybe LiveView could go the same route? If we could introduce :key as an optional attribute, the HEEx engine could calculate:

- added elements (and their positions)

- removed elements

- updated elements (and their positions)

and send an efficient, minimal payload to the client. This actually could even enable efficient diffs for components nested below :for loops.
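A rough sketch of what such a calculation could look like, again in plain Elixir. Everything here is an assumption for illustration: `KeyedPatch`, `patch/4` and the `{:insert, ...}` / `{:update, ...}` / `{:remove, ...}` tuples are invented names and shapes, not LiveView's actual diff format, and `render_fun` stands in for whatever the engine would render per element.

```elixir
defmodule KeyedPatch do
  @moduledoc "Hypothetical sketch, NOT LiveView's real diff format."

  # Returns a list of operations describing how to get from the old
  # list to the new one. Unchanged elements produce no operation.
  def patch(old_items, new_items, key_fun, render_fun) do
    # key => previously rendered content
    old = Map.new(old_items, &{key_fun.(&1), render_fun.(&1)})
    new_keys = MapSet.new(new_items, key_fun)

    # Keys that existed before but are gone now.
    removed =
      old_items
      |> Enum.map(key_fun)
      |> Enum.reject(&MapSet.member?(new_keys, &1))
      |> Enum.map(&{:remove, &1})

    # Walk the new list and emit inserts/updates with their positions.
    changed =
      new_items
      |> Enum.with_index()
      |> Enum.flat_map(fn {item, position} ->
        key = key_fun.(item)
        content = render_fun.(item)

        case Map.fetch(old, key) do
          :error -> [{:insert, key, position, content}]
          {:ok, ^content} -> []
          {:ok, _different} -> [{:update, key, position, content}]
        end
      end)

    removed ++ changed
  end
end

old = [%{id: 2, name: "New2"}, %{id: 1, name: "New1"}]
new = [%{id: 3, name: "New3"} | old]

KeyedPatch.patch(old, new, & &1.id, & &1.name)
# => [{:insert, 3, 0, "New3"}]
```

With the demo's "add" event, this would describe the change as a single insert instead of re-sending every element. The sketch deliberately ignores reordering: an element that moves without changing its content produces no operation here.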

An example (notice added :key):

```elixir
def render(assigns) do
  ~H"""
  <ul>
    <li :for={i <- @items} :key={i.id}>
      <%= i.name %>
    </li>
  </ul>
  """
end
```

Implications

- This would require keeping the previous value of the list assign around in `assigns.__changed__`, which is currently not the case.

- This would impose a small overhead of calculating old keys, new keys and figuring out what was updated, but only when `:key` is present. I think it’s worth doing that and sending a smaller payload rather than sending everything.

- Reordering might require some thought.

- I’m not sure if it would be a breaking change, possibly not?

All in all, I believe this optimization should be possible to accomplish. I might give it a shot myself, I just wanted to ask the community for some feedback first. What’s your opinion?


EDIT: I wasn’t aware this category is only for registered users. Could someone move it to Phoenix questions or some other public place? I would like to refer to this in a GitHub PR / issue if I’m able to sit down and tackle it.
