Any insight would be appreciated here…

I’m getting this message in my logs and can’t quite figure it out:

Postgrex.Protocol (#PID<___>) disconnected: ** (DBConnection.ConnectionError) client #PID<___> exited

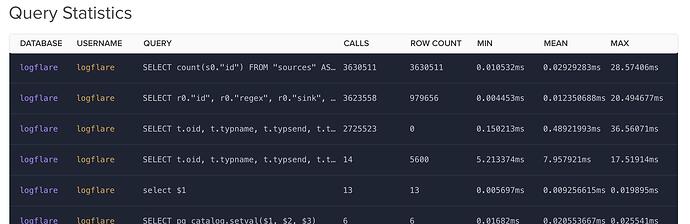

I’ve been working on a centralized logging service, https://logflare.app. It’s really easy to start using if you’re on Cloudflare because we have a Cloudflare app. Recently we’ve been getting some larger sites signing up.

This weekend we got another big one playing with it. CPU was high and RAM started climbing. Looking at the logs, I see that message.

At that point everything was on a $40 a month DigitalOcean box (which is awesome because we are handling a lot of requests; sometimes sites are sending upwards of 1000 requests a second). No downtime at all and no bad response codes. Ecto seems to just be reissuing the query or something if the client exits.

My first try at alleviating this was to move Postgres to its own instance. I did that … still seeing errors. It doesn’t seem to be Postgres struggling. I upped the Ecto pool size and enabled pooling on Postgres. No difference there.
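For reference, the pool change is just the `pool_size` option on the repo config. This is only a sketch of the shape of it: the app name, repo module, and the value are illustrative assumptions, not copied from the repo.

```elixir
# config/prod.exs (sketch; :logflare / Logflare.Repo and the number are assumed)
import Config

config :logflare, Logflare.Repo,
  # Number of database connections Ecto keeps open in its pool.
  pool_size: 20
```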

Then I started caching API keys in ETS, which is also awesome because it was super easy. That put a little lighter load on Postgres, but I still get those errors in the logs.
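Roughly the idea, as a minimal sketch rather than the actual Logflare code: look the key up in a named ETS table first and only fall back to the database on a miss. `ApiKeyCache`, the table name, and the `fallback` function are all made up for illustration.

```elixir
defmodule ApiKeyCache do
  # Hypothetical module and table names; a simplified sketch, not the real code.
  @table :api_key_cache

  # Create the named table once at startup. The calling process owns the
  # table, so call this from a long-lived process (e.g. a supervised one).
  def init do
    :ets.new(@table, [:set, :named_table, :public, read_concurrency: true])
    :ok
  end

  # Return the cached value for `api_key`, or run `fallback` (for example the
  # Ecto query), store its result in ETS, and return it.
  def fetch(api_key, fallback) when is_function(fallback, 0) do
    case :ets.lookup(@table, api_key) do
      [{^api_key, value}] ->
        value

      [] ->
        value = fallback.()
        :ets.insert(@table, {api_key, value})
        value
    end
  end
end
```

With something like this, only the first request for a given key hits Postgres. Note the sketch never expires entries and will also cache a `nil` result, so a real version would need some invalidation.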

Not quite sure where to go from here. The way I see it I can:

a) Cache more stuff in ETS.

b) Upgrade the box.

c) Remove Nginx, as maybe there is some contention there (currently limiting Nginx to 2 workers and it’s a 4-core box).

I’m still kind of just throwing darts here, though. Is something in my code killing the client? Is whatever process is making that call finishing before the query returns and causing the exit?

I need to figure out how to run Observer remotely. Maybe that will shed some light on things. I’m assuming the RAM issue is Logger filling up with error messages.

Another fun side effect is that `mix edeliver restart production` just hangs. No idea why that is either.

Code is all up at: https://github.com/Logflare/logflare