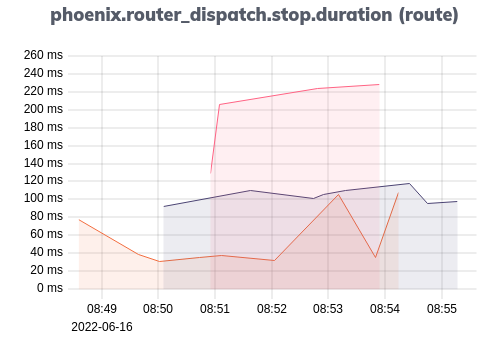

I upgraded LiveView from 0.15.4 → 0.17.7 in March. I recently noticed that the duration has doubled from 35 to 70 ms since then, which is quite confusing to me.

This could be caused by a few things:

- upgrading from 0.15.4 → 0.17.7

- a server change, although it was a move to a beefier server

- preparing for localization and adding a great deal of gettext usage

I attempted to profile in several ways, but can’t seem to instrument the right place.

- incendium uses eflame, which uses :erlang.trace. Using master so that it works with newer Phoenix, I added the decorator in various places:

  - controller - works as expected and shows Elixir.Phoenix.Controller:render_and_send and Elixir.Plug.Conn:send_resp

  - LiveView mount - only shows the function itself

  - LiveView handle_params - only shows the function itself

  - render - does not work; gives only :eflame.stop_trace, :eflame_trace, :erts_internal_trace

  - router scope - does not work

  - in app/lib/app_web.ex - decorating controller or view doesn’t work

- I tried Profiler, which uses :fprof under the hood, and couldn’t get a useful trace either.
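As a cross-check that does not depend on tracing at all, a callback body can also be timed by hand with Erlang's `:timer.tc/1`. This is only a minimal sketch: `TimingSketch` and `measure/2` are hypothetical names that are not part of the app, incendium, or LiveView, and it reports wall-clock time for one call rather than a flame graph.

```elixir
defmodule TimingSketch do
  # Hypothetical helper, not part of LiveView or incendium.
  require Logger

  # Runs `fun`, logs how long it took under `label`,
  # and returns the result of `fun` unchanged.
  def measure(label, fun) when is_function(fun, 0) do
    # :timer.tc/1 returns {elapsed_microseconds, result}
    {micros, result} = :timer.tc(fun)
    Logger.info("#{label} took #{micros / 1000} ms")
    result
  end
end

# Hypothetical usage inside a LiveView callback:
#
#   def mount(params, session, socket) do
#     TimingSketch.measure("mount", fn ->
#       {:ok, assign(socket, :page, load_page(params))}
#     end)
#   end
```

Wrapping mount, handle_params, and render one at a time like this would at least show which callback accounts for the extra time, even when the trace-based tools show nothing useful.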

Anyone have ideas?