In my journey of playing with Ports, the OpenCV Python wrapper and real-time object detection, I’ve finally ended up playing (for the first time) with Scenic… what a joy (thanks @boydm!)!

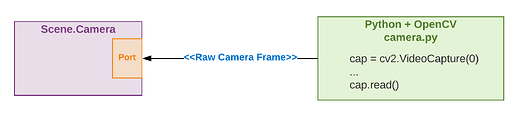

What I’m trying to do is render raw camera frames in a window with Scenic (later I’ll also render object detection labels and bounding boxes). At the moment I’m using a Python script with OpenCV, which reads the frames from the camera, converts each numpy array to a binary with 3 uint8 bytes (RGB) per pixel and pushes the frames to Elixir via a port.
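To make the "3 uint8 bytes per pixel" layout concrete, here is a minimal sketch that does the same conversion on a tiny synthetic frame, without a camera. It swaps in numpy slicing (`[:, :, ::-1]`) for `cv2.cvtColor(..., cv2.COLOR_BGR2RGB)`, which reverses the channel order in the same way; the function name `bgr_frame_to_rgb_bytes` is hypothetical.

```python
import numpy as np


def bgr_frame_to_rgb_bytes(frame_bgr: np.ndarray) -> bytes:
    """Convert an OpenCV-style BGR frame (H x W x 3, uint8) to packed RGB bytes."""
    if frame_bgr.dtype != np.uint8 or frame_bgr.ndim != 3 or frame_bgr.shape[2] != 3:
        raise ValueError("expected an H x W x 3 uint8 array")
    # Reversing the last axis swaps B and R (same effect as COLOR_BGR2RGB).
    rgb = frame_bgr[:, :, ::-1]
    # tobytes() copies the data in C (row-major) order: row 0 left to right, then row 1...
    return rgb.tobytes()


# A 2x2 BGR test frame: top row blue, green; bottom row red, white.
frame = np.array(
    [[[255, 0, 0], [0, 255, 0]],
     [[0, 0, 255], [255, 255, 255]]],
    dtype=np.uint8,
)
data = bgr_frame_to_rgb_bytes(frame)
print(len(data))         # 2 * 2 * 3 = 12
print(list(data[:3]))    # first pixel (blue) in RGB order: [0, 0, 255]
```

So a 1280x720 frame becomes a flat binary of 1280 × 720 × 3 bytes with no header or padding, which is what gets pushed through the port.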

The example below works well, but since I’ve just started with Scenic, I’m wondering if there is a better/easier pattern, maybe a ready-to-use library that reads camera frames?

What impressed me is that the result with Scenic + Port is far smoother than rendering the frames with OpenCV’s cv2.imshow("Frame", arr) in Python! From what I can see there is almost no perceptible delay.

I’ll try to post a quick video showing the comparison.

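```python
# camera.py
import os
import time
from struct import pack

import cv2

WIDTH, HEIGHT = 1280, 720  # must match the texture size used on the Elixir side


def setup_io():
    # With :nouse_stdio the port uses fd 3 (from Erlang) and fd 4 (to Erlang).
    return os.fdopen(3, "rb"), os.fdopen(4, "wb")


def write_frame(output, message):
    # 4-byte big-endian length prefix, as expected by {:packet, 4}.
    header = pack("!I", len(message))
    output.write(header)
    output.write(message)
    output.flush()


def open_camera(source=0):
    cap = cv2.VideoCapture(source)
    # This is only a request: the camera may pick a different resolution.
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, WIDTH)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, HEIGHT)
    return cap


def get_and_write_frame(cap, output_f):
    ok, arr = cap.read()
    if not ok:
        return False  # no frame available (camera busy, disconnected, ...)
    if arr.shape[:2] != (HEIGHT, WIDTH):
        arr = cv2.resize(arr, (WIDTH, HEIGHT))  # dsize is (width, height)
    # OpenCV frames are BGR; the Scenic :rgb texture expects RGB.
    arr = cv2.cvtColor(arr, cv2.COLOR_BGR2RGB)
    # Row-major, 3 uint8 bytes per pixel: WIDTH * HEIGHT * 3 bytes per frame.
    write_frame(output_f, arr.tobytes())
    return True


def run():
    _input_f, output_f = setup_io()
    cap = open_camera(0)
    while True:
        if not get_and_write_frame(cap, output_f):
            time.sleep(0.01)  # avoid a busy loop while no frame is available


if __name__ == "__main__":
    run()
```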
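The length prefix is the whole protocol between the two sides: with `{:packet, 4}` the Erlang runtime expects a 4-byte big-endian length before each message and strips it before delivering `{:data, ...}`. A minimal sketch of both directions, exercised on an in-memory buffer instead of fds 3/4. `write_packet`, `read_packet` and `_read_exact` are hypothetical names; `read_packet` is only what the script would need if the Elixir side ever sent commands back over fd 3 (the script above never reads from it).

```python
import io
import struct


def write_packet(output, payload: bytes) -> None:
    # {:packet, 4}: 4-byte big-endian unsigned length, then the payload.
    output.write(struct.pack("!I", len(payload)))
    output.write(payload)
    output.flush()


def _read_exact(stream, n: int) -> bytes:
    # read() may return fewer bytes than asked on pipes, so loop until we have n.
    buf = bytearray()
    while len(buf) < n:
        chunk = stream.read(n - len(buf))
        if not chunk:
            raise EOFError("stream closed mid-packet")
        buf.extend(chunk)
    return bytes(buf)


def read_packet(stream) -> bytes:
    (length,) = struct.unpack("!I", _read_exact(stream, 4))
    return _read_exact(stream, length)


buf = io.BytesIO()
write_packet(buf, b"frame-1")
write_packet(buf, bytes(12))
print(buf.getvalue()[:4])   # b'\x00\x00\x00\x07'
buf.seek(0)
first = read_packet(buf)
second = read_packet(buf)
print(first, len(second))   # b'frame-1' 12
```

The receiver loops in `_read_exact` because a single `read()` on a pipe can return a partial chunk, which matters for ~2.8 MB frames.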

Camera Scene on the Elixir side

```elixir
defmodule Cv.Scene.Camera do
  use Scenic.Scene

  require Logger

  alias Scenic.Graph
  alias Scenic.ViewPort

  import Scenic.Primitives

  # Must match the frame size produced by camera.py.
  @width 1280
  @height 720

  def init(_args, opts) do
    {:ok, %ViewPort.Status{size: {_vp_width, _vp_height}}} = ViewPort.info(opts[:viewport])

    graph =
      Graph.build(font: :roboto)
      # The rectangle is filled with the "camera_frame" dynamic texture.
      |> rect({@width, @height}, id: :white, fill: {:dynamic, "camera_frame"})

    # {:packet, 4} strips the 4-byte length header, so handle_info gets whole frames;
    # :nouse_stdio makes the port talk to the script over fds 3 and 4.
    _port = Port.open({:spawn, "python camera.py"}, [:binary, {:packet, 4}, :nouse_stdio])

    {:ok, graph, push: graph}
  end

  def handle_info({_port, {:data, raw_frame}}, graph) do
    # raw_frame is already @width * @height * 3 RGB bytes: no decoding needed.
    Scenic.Cache.Dynamic.Texture.put("camera_frame", {:rgb, @width, @height, raw_frame, []})
    {:noreply, graph}
  end
end
```

On the Scenic side there is no need for decoding: handle_info receives the raw frame bytes, which can be put directly into the camera_frame dynamic texture (I still have to better understand how textures work in Scenic…).
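Why no decoding is needed: the `{:rgb, 1280, 720, raw_frame, []}` tuple just describes the buffer, and the buffer is already tightly packed, 3 bytes per pixel, one row after another. Assuming Scenic’s `:rgb` texture reads the same layout (which the correctly rendered image suggests), the byte offset of any pixel is simple arithmetic. A minimal sketch with a hypothetical `rgb_pixel` helper:

```python
def rgb_pixel(frame: bytes, width: int, height: int, x: int, y: int) -> tuple:
    """Return the (r, g, b) of pixel (x, y) in a packed, row-major RGB buffer."""
    expected = width * height * 3
    if len(frame) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(frame)}")
    if not (0 <= x < width and 0 <= y < height):
        raise IndexError("pixel out of range")
    # Skip y full rows, then x pixels, 3 bytes each.
    offset = (y * width + x) * 3
    return tuple(frame[offset:offset + 3])


# 2x2 frame: red, green / blue, white
frame = bytes([255, 0, 0, 0, 255, 0, 0, 0, 255, 255, 255, 255])
print(rgb_pixel(frame, 2, 2, 1, 0))  # (0, 255, 0)
print(rgb_pixel(frame, 2, 2, 0, 1))  # (0, 0, 255)
```

The length check is also a cheap guard worth having on the Elixir side: a frame whose size is not width × height × 3 means the camera delivered a different resolution.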

At the beginning I was concerned about performance, thinking that the port could be a bottleneck, but fortunately I was wrong! A 720p (1280x720) raw RGB frame is ~2.8 MB (1280 × 720 × 3 bytes), and I measured a round-trip time of ~0.6 ms for a message of this size via the port; the maximum throughput I could get with a port is ~1.7 GB/s on a MacBook Pro.
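To put the measured port throughput in context, here is the bandwidth a raw RGB stream actually needs. The 30 fps figure is an assumption (a common webcam rate), not something measured above.

```python
def frame_bytes(width: int, height: int, channels: int = 3) -> int:
    # Raw, uncompressed frame: one uint8 per channel per pixel.
    return width * height * channels


def stream_bytes_per_second(width: int, height: int, fps: int, channels: int = 3) -> int:
    return frame_bytes(width, height, channels) * fps


for w, h in [(640, 480), (1280, 720)]:
    size = frame_bytes(w, h)
    rate = stream_bytes_per_second(w, h, 30)
    print(f"{w}x{h}: {size} B/frame, {rate / 1e6:.1f} MB/s at 30 fps")
# 640x480: 921600 B/frame, 27.6 MB/s at 30 fps
# 1280x720: 2764800 B/frame, 82.9 MB/s at 30 fps
```

At ~83 MB/s, a 720p stream uses only about 1/20 of the ~1.7 GB/s measured through the port.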

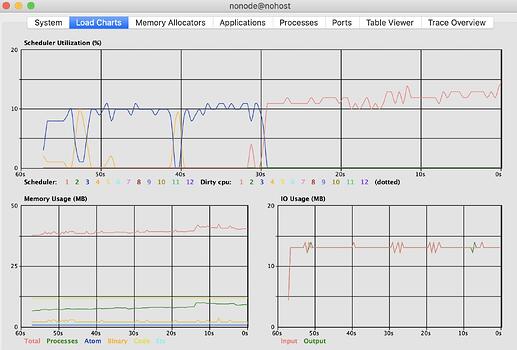

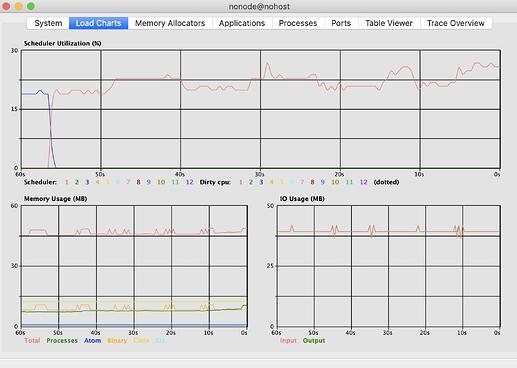

Some snapshots of the observers!

640x480 frames

1280x720 frames

[Video: … trying to show the difference between the two]