Our main app is a Rails monolith, accompanied by Phoenix app for the websockets channels.

Both Rails and Phoenix are behind nginx, nginx has a separate server, rails app runs on 3 servers and phoenix on one server, all inside DigitalOcean datacenter.

This works fine for us and we plan to continue building new features using these blocks.

Now you have some context and here goes the problem itself:

I keep seeing upstream timed out (110: Connection timed out) while connecting to upstream error in nginx error.log (and 504 in access.log).

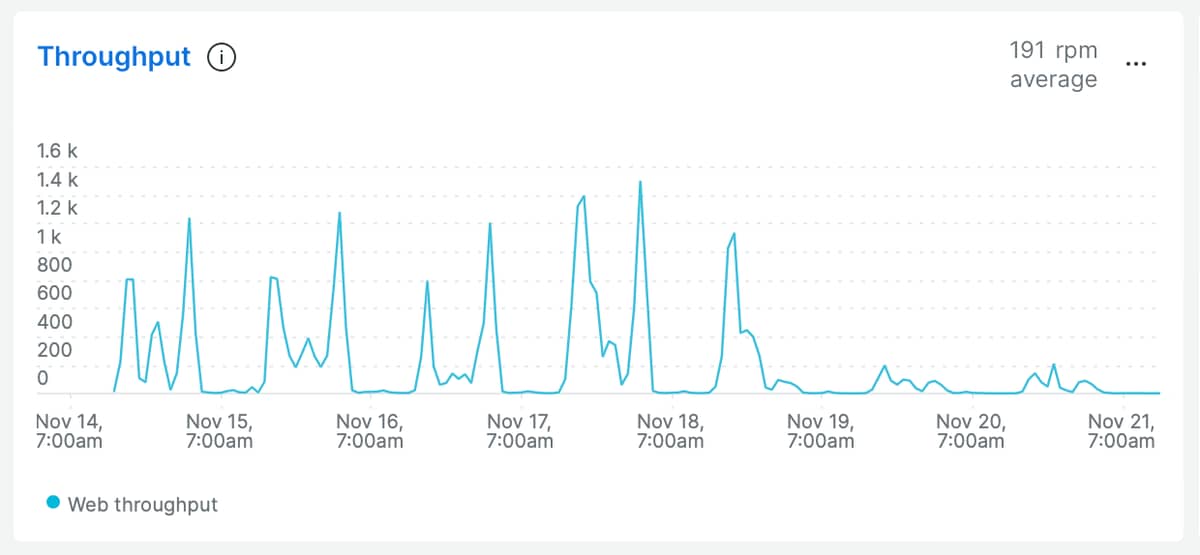

It is rather infrequent, say 0.01% or maybe even less (for quieter day like last Sunday it is actually 0.005% (3 requests out of ~60K), but I’m really annoyed by this error and want to get to the bottom of this.

Connection timed out means that nginx spent 60s waiting for the phoenix app to respond, this happens both with WSS connections, LP fallback and even API calls

This is the controller code:

def stream_delay(conn, %{"time" => time}) do

current = System.system_time(:millisecond)

delay = ((current - String.to_integer(time)) / 1000) |> round

json(conn, %{delay: delay})

end

This is the pipeline:

pipeline :public_api do

if Application.get_env(:phoenix_app, :set_cors) do

plug CORSPlug, origin: ["http://localhost:3000", "http://localhost:4000"]

end

plug :accepts, ["json"]

end

I’m posting this to show that this code has no external dependencies.

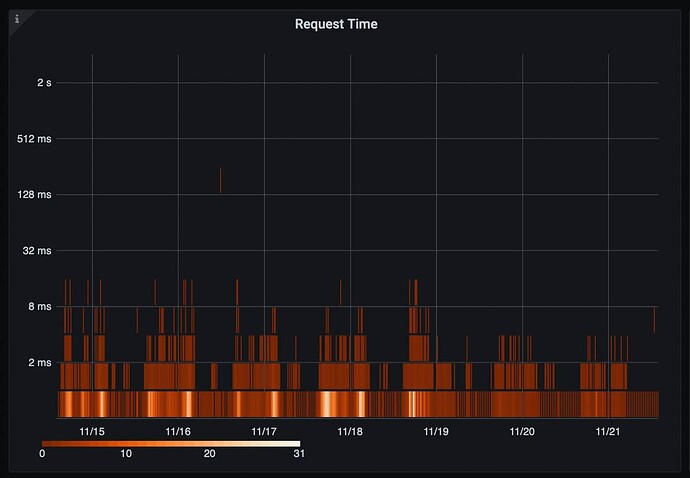

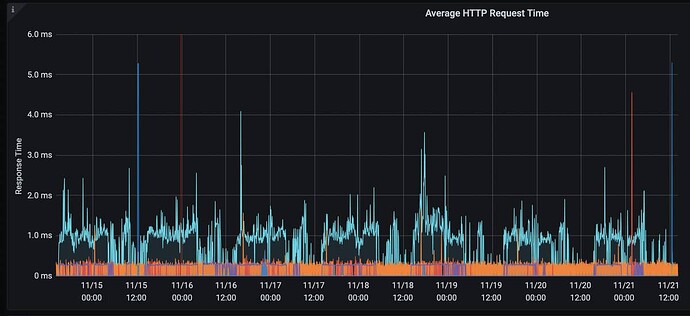

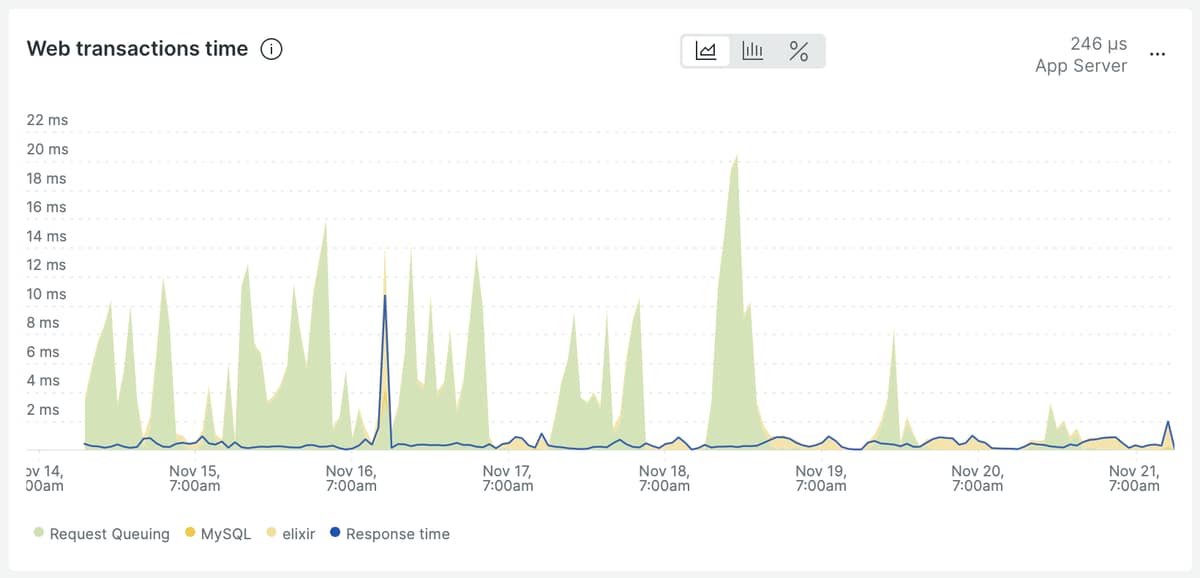

The response time according to 2 different monitoring tools (NewRelic and prom_ex phoenix exporter) is less than 1ms.

Also, according to node_exporter graphs, CPU (and this endpoint could be slow only due to CPU) is at ~5-10% (server has 8 cores / 32GB RAM)

And another thing – we use X-Request-Start header to observe the delay the requests spend in the queue before they can be processed by Phoenix, and I keep seeing some very strange numbers there, too: for the Rails app it is usually within 1-3ms (jumping up to 5ms-10ms sometimes), for the Phoenix app it can anywhere from 1ms to 30-50-100ms. That makes zero sense to me.

My initial nginx config was something like this:

location /phoenix/api { deny all; }

location /phoenix/ {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

proxy_set_header X-Request-Start "t=${msec}";

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "Upgrade";

proxy_redirect off;

proxy_http_version 1.1;

proxy_pass http://phoenix/;

}

I thought that these two guys

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "Upgrade";

could be an issue for the usual API calls, so I’ve separated it into 2 locations:

location /phoenix/socket/ {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

proxy_set_header X-Request-Start "t=${msec}";

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "Upgrade";

proxy_redirect off;

proxy_http_version 1.1;

proxy_pass http://phoenix/socket/;

break;

}

location /phoenix/ {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

proxy_set_header X-Request-Start "t=${msec}";

proxy_redirect off;

proxy_pass http://phoenix-api/;

}

Upstream looks like this:

upstream phoenix {

server {{phoenix_app_address}}:4000;

}

upstream phoenix-api {

server {{phoenix_app_address}}:4000;

keepalive 5;

}

I added keepalive thinking that the only issue that could be at play here is a network subsystem and this can be improved by reducing the number socket connections made between nginx and phoenix, but I don’t see any improvements.

Speaking of sockets: both servers are nowhere near their open sockets limit, nginx sometimes has around 20K open sockets, phoenix server is usually 1/3 of that number, so it’s 6-7K.

Open file limit is 32K per nginx worker (we run 2 workers on 2 cores), for phoenix it is 65K.

So my question is: how would you approach investigating this further? My experience with Rails tells me that queueing time and timeouts are caused by app being slow, but I see zero evidence that phoenix app is slow (both NewRelic and prom_ex tell me that 99% of requests are within 1-10ms range), so I’m trying to investigate the OS limits / perf, but again with zero success.

some graphs

PS

please, don’t suggest using phoenix without nginx, I’m aware about this option, I’m more interested in finding out what’s going on here rather than moving things around hoping it will help.

EDIT

After reading it again, I think that I can set proxy_connect_timeout and proxy_read_timeout to 1s (or 5s). This is way above what I would expect to see in production anyways, but it could give a clue if it is a very rare thing to happen, or there are many requests that spend say 3-5s waiting to connect to phoenix upstream.

Though if this number is reflected in the Queueing time in NR I think it would be much higher than 20-30ms if it was happening more often. But after all, I’m not even sure if these two (504 and queue time) are related or not.