Hello!

I am building a pipeline to process, aggregate and transform data from CSV files and then write the result to another CSV file. I load rows from a 19-column CSV file and, with some mathematical operations (map-reduce style), write 30 columns to another CSV.

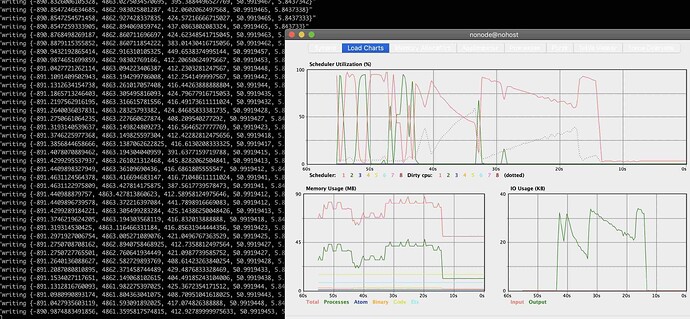

It was going fine until I tried to upload a 25 MB file (250,000 rows) to the application, so I decided to stream all the operations instead of processing them eagerly. Now that I'm converting function by function to streams, I've hit a problem I don't understand: with only 5 fields created so far, when I try to write to the file the program just freezes and stops writing after a few thousand lines.

I'm streaming every single function, so as far as I understand there shouldn't be any locks, and the first few thousand writes work fine, so I wonder what's happening. In the Erlang observer all I can see is resource usage dropping to near 0, and nothing more gets written to the file.

This is my stream function (the step before it just loads the rows from the file), followed by my write function:

    def process(stream, field_longs_lats, team_settings) do
      main_stream =
        stream
        # Removing ones that don't have a timestamp
        |> Stream.filter(fn [time | _tl] -> time != "-" end)
        # Filter all duplicated rows by timestamp
        |> Stream.uniq_by(fn [time | _tl] -> time end)
        |> Stream.map(&Transform.apply_row_tranformations/1)

      cumulative_milli =
        main_stream
        |> Stream.map(fn [_time, milli | _tl] -> milli end)
        |> Statistics.cumulative_sum()

      speeds =
        main_stream
        |> Stream.map(fn [_time, _milli, _lat, _long, pace | _tl] ->
          pace
        end)
        |> Stream.map(&Statistics.get_speed/1)

      cals = Motion.calories_per_timestep(cumulative_milli, cumulative_milli)

      long_stream =
        main_stream
        |> Stream.map(fn [_time, _milli, lat | _tl] -> lat end)

      lat_stream =
        main_stream
        |> Stream.map(fn [_time, _milli, _lat, long | _tl] -> long end)

      x_y_tuples =
        RelativeCoordinates.relative_coordinates(long_stream, lat_stream, field_longs_lats)

      x = Stream.map(x_y_tuples, fn {x, _y} -> x end)
      y = Stream.map(x_y_tuples, fn {_x, y} -> y end)

      [x, y, cals, long_stream, lat_stream]
    end
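
One thing that may be relevant to the shape of `process/3`: every stream derived from `main_stream` is lazy, so each one re-runs the whole upstream pipeline (filter, `uniq_by`, transformations, and the file read behind them) when it is enumerated. The snippet below is only a minimal sketch with made-up data (the `source`, `doubled` and `plus_one` names are hypothetical), and it does not claim to explain the freeze. It just makes the repeated reads visible:

```elixir
# Stand-in for main_stream: announces every element it produces.
source =
  Stream.map(1..3, fn x ->
    IO.puts("reading #{x}")
    x
  end)

# Two branches derived from the same lazy source (hypothetical names).
doubled = Stream.map(source, &(&1 * 2))
plus_one = Stream.map(source, &(&1 + 1))

# Zipping the branches enumerates `source` once per branch,
# so each "reading n" line is printed twice.
result = [doubled, plus_one] |> Stream.zip() |> Enum.to_list()

IO.inspect(result)
```

With five branches zipped together, as in the list returned above, the source rows would be read and transformed five times or more.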

The write function:

    def write_to_file(keyword_list, file_name) do
      file = File.open!(file_name, [:write, :utf8])

      IO.write(file, V4.empty_v4_headers() <> "\n")

      keyword_list
      |> Stream.zip()
      |> Stream.each(&write_tuple_row(&1, file))
      |> Stream.run()

      File.close(file)
    end

    @spec write_tuple_row(tuple(), pid()) :: :ok
    def write_tuple_row(tuple, file) do
      IO.inspect("writing #{inspect(tuple)}")

      row_content =
        Tuple.to_list(tuple)
        |> Enum.map_join(",", fn value -> Transformations.to_string(value) end)

      IO.write(file, row_content <> "\n")
    end
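
For comparison, here is a rough sketch of a single-pass variant, where each input row becomes one output row and the running sum is carried as the accumulator of `Stream.transform/3`, so nothing has to be zipped back together. The row shape `[time, milli, lat, long]`, the sample values and the output path are all assumptions for illustration, not my real data or modules:

```elixir
# Hypothetical rows shaped as [time, milli, lat, long]; values are made up.
rows = [
  ["t1", 10, 1.0, 2.0],
  ["-", 99, 0.0, 0.0],
  ["t2", 20, 1.5, 2.5],
  ["t2", 20, 1.5, 2.5],
  ["t3", 30, 2.0, 3.0]
]

# Hypothetical output location in the system temp directory.
output = Path.join(System.tmp_dir!(), "single_pass_out.csv")

rows
# Drop rows without a timestamp, then duplicates by timestamp.
|> Stream.reject(fn [time | _tl] -> time == "-" end)
|> Stream.uniq_by(fn [time | _tl] -> time end)
# Carry the cumulative sum in the accumulator instead of a second stream.
|> Stream.transform(0, fn [time, milli, lat, long], sum ->
  total = sum + milli
  {[[time, total, lat, long]], total}
end)
|> Stream.map(fn row -> Enum.join(row, ",") <> "\n" end)
# File.stream!/1 is collectable, so the rows are written as they arrive.
|> Stream.into(File.stream!(output))
|> Stream.run()

written = File.read!(output)
line_count = written |> String.split("\n", trim: true) |> length()
```

This keeps one enumeration of the source from start to finish, at the cost of building the whole output row in one place rather than column by column.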