I understand that sleeping or delaying is, for good reasons, frowned upon by the Erlang community. `Process.send_after/4` is an excellent alternative in most cases, but it offers at best 1 ms precision (as does the underlying Erlang `erlang:send_after` call). Yet 1 ms is an eternity for my application.
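The 1 ms floor is easy to observe. The sketch below is illustrative only: the module name `SendAfterProbe` is hypothetical. It schedules a `Process.send_after/3` message to the calling process and measures the real wait with `System.monotonic_time/1`. Because the delay argument is an integer number of milliseconds, 1 ms is the smallest non-zero delay you can request, and BEAM timers do not fire early, so the measured value should never be below 1000 µs.

```elixir
defmodule SendAfterProbe do
  # Hypothetical helper: returns how many microseconds a Process.send_after/3
  # message with a delay of `delay_ms` milliseconds took to arrive.
  def measure(delay_ms \\ 1) do
    start = System.monotonic_time(:microsecond)
    ref = make_ref()
    Process.send_after(self(), {:probe, ref}, delay_ms)

    receive do
      {:probe, ^ref} -> System.monotonic_time(:microsecond) - start
    end
  end
end

IO.puts("1 ms timer arrived after #{SendAfterProbe.measure()} µs")
```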

I am writing time-aware code where events must NOT happen before a given point in time. The exact delay is not crucial; it just needs to be roughly consistent and, at most, in the range of tens of microseconds. The solution should also scale easily to 10k+ concurrent timers to begin with.

There are a few options that come to mind for achieving sub-millisecond timing:

- The naive one would be a dirty NIF running on a dirty scheduler in combination with POSIX `nanosleep`. There are two issues with this approach. First, no form of sleep is scalable. Second, whenever a context switch happens, there is roughly 30 microseconds of lag, which completely defeats the nano part of `nanosleep`.

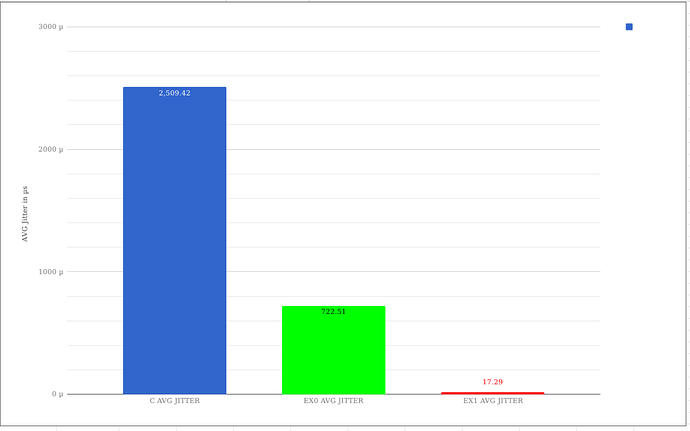

- Using a standard NIF and POSIX `timer_settime`. The NIF is called only once, to set up the timer. The Elixir process that started the NIF then receives messages at consistent time intervals (either from a `SIGEV_SIGNAL` signal handler or from another pthread within the NIF). With a 50 microsecond interval, however, this amounts to roughly 4M reductions on the timer process.

- Implementing a native `send_after_microseconds`, only this time utilizing a ring buffer. At this point, this is the solution I am most inclined towards, as it would not spam nearly as many messages.
- Introducing yet another type of scheduler to Erlang, dedicated to time-critical operations.
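To make the ring-buffer idea in option 3 concrete, here is a pure-Elixir sketch of a "timing wheel". Everything in it (`TimerWheel`, `new/1`, `schedule/4`, `tick/1`) is hypothetical and not part of any existing library. Slot `rem(now + delay, size)` collects every entry due `delay` ticks from now, so scheduling never has to walk the pending timers. In a NIF the slots would presumably be a mutable C array; here an immutable tuple stands in, so each `put_elem/3` copies it, and the per-call cost depends on the wheel size rather than on the number of pending timers.

```elixir
defmodule TimerWheel do
  # Hypothetical sketch of a ring buffer of timer buckets (a timing wheel).
  # `now` is the current tick; `slots` is a tuple of `size` lists.
  defstruct size: 0, now: 0, slots: {}

  def new(size) when is_integer(size) and size > 0 do
    %__MODULE__{size: size, slots: :erlang.make_tuple(size, [])}
  end

  # Queues {pid, term} for delivery `delay` ticks from now. Delays longer than
  # one revolution (delay > size) would need a second, coarser wheel.
  def schedule(%__MODULE__{size: size, now: now, slots: slots} = wheel, pid, term, delay)
      when is_integer(delay) and delay >= 1 and delay <= size do
    index = rem(now + delay, size)
    %{wheel | slots: put_elem(slots, index, [{pid, term} | elem(slots, index)])}
  end

  # Advances one tick, sends {now, term} for every entry in the slot that just
  # became due (in the order they were scheduled), then empties that slot.
  def tick(%__MODULE__{size: size, now: now, slots: slots} = wheel) do
    now = now + 1
    index = rem(now, size)

    slots
    |> elem(index)
    |> Enum.reverse()
    |> Enum.each(fn {pid, term} -> send(pid, {now, term}) end)

    %{wheel | now: now, slots: put_elem(slots, index, [])}
  end
end

# Usage: schedule two messages to ourselves, advance two ticks, read them back.
wheel =
  TimerWheel.new(1024)
  |> TimerWheel.schedule(self(), :later, 2)
  |> TimerWheel.schedule(self(), :sooner, 1)

_wheel = wheel |> TimerWheel.tick() |> TimerWheel.tick()

for _ <- 1..2 do
  receive do
    msg -> IO.inspect(msg)
  end
end

# prints {1, :sooner} then {2, :later}
```

The process owning the wheel would call `tick/1` once per clock message, so timers in the same slot share a single wake-up instead of each costing a message of its own.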

Has anyone faced a similar problem? Any hints regarding efficiency or further options would be highly appreciated! Below is the preliminary code for option 2.

Cheers,

Martin

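```elixir
defmodule Clock do
  use GenServer
  require Logger

  @on_load :load_nifs

  # Loads the C part, which replaces the send_every/2 stub below.
  # The path is relative to the current working directory.
  def load_nifs() do
    :ok = :erlang.load_nif('priv/c/clock', 0)
  end

  def start_link(_arg) do
    GenServer.start_link(__MODULE__, :ok, name: __MODULE__)
  end

  # Delivers {tick, term} to pid after `ticks` clock ticks (50 µs each with the
  # interval used in init/1). `ticks` must be a positive integer.
  def send_after(pid, term, ticks) when is_integer(ticks) and ticks > 0 do
    GenServer.cast(__MODULE__, {:send, pid, term, ticks})
  end

  def get_time() do
    GenServer.call(__MODULE__, :get_time)
  end

  @impl true
  def init(_arg) do
    Logger.debug("starting clock")
    Process.flag(:priority, :high)
    # NIF: sends :tick to this process every 50 microseconds.
    send_every(:tick, 50)
    # State: {current tick, buffer}. Bucket i of the buffer is flushed on the
    # (i + 1)-th tick from now.
    {:ok, {0, []}}
  end

  @impl true
  def handle_info(:tick, {tick, []}) do
    {:noreply, {tick + 1, []}}
  end

  def handle_info(:tick, {tick, [head | tail]}) do
    Enum.each(head, fn {pid, term} -> send(pid, {tick, term}) end)
    {:noreply, {tick + 1, tail}}
  end

  @impl true
  def handle_cast({:send, pid, term, ticks}, {tick, buffer}) do
    # A delay of `ticks` belongs in bucket `ticks - 1`.
    new_buffer =
      case length(buffer) - ticks do
        -1 ->
          # The buffer ends right before the target bucket: append it.
          buffer ++ [[{pid, term}]]

        rem when rem < 0 ->
          # Pad with empty buckets up to the target bucket.
          buffer ++ List.duplicate([], -1 - rem) ++ [[{pid, term}]]

        _ ->
          # The target bucket already exists: append to it.
          List.update_at(buffer, ticks - 1, &(&1 ++ [{pid, term}]))
      end

    {:noreply, {tick, new_buffer}}
  end

  @impl true
  def handle_call(:get_time, _from, {tick, _} = status) do
    {:reply, tick, status}
  end

  # Stub replaced by the NIF on load.
  defp send_every(_term, _micros) do
    :erlang.nif_error(:nif_not_loaded)
  end
end
```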
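Separately from the tick source, `handle_cast/2` above walks the whole buffer on every call (`length/1`, `++` and `List.update_at/3` are all linear in the buffer length), which may start to matter at 10k+ pending timers. One possible alternative, sketched here under the assumption that timers are keyed by their absolute due tick, is a map from due tick to entries. The module `TickBuffer` and its functions are hypothetical. In `Clock`, `handle_info(:tick, ...)` would first advance the tick and then call `pop_due/2` with the new value.

```elixir
defmodule TickBuffer do
  # Hypothetical replacement for Clock's list-of-lists buffer: a map from the
  # absolute tick at which entries are due to those entries (newest first).
  def new, do: %{}

  # An entry sent at tick `now` with a delay of `ticks` is due at `now + ticks`.
  # Map.update/4 costs O(log n) in the number of distinct due ticks.
  def insert(buffer, now, ticks, entry) when is_integer(ticks) and ticks > 0 do
    Map.update(buffer, now + ticks, [entry], &[entry | &1])
  end

  # Removes everything due at `tick` and returns it in scheduling order,
  # together with the remaining buffer.
  def pop_due(buffer, tick) do
    {due, rest} = Map.pop(buffer, tick, [])
    {Enum.reverse(due), rest}
  end
end
```

Ticks with nothing due cost a single failed map lookup, so the empty-buffer fast path of the original is preserved.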