I have too much work right now, so it’s not possible for me. If @dsissitka can make one, that’s great!

I was curious to see if replacing what they have now…

```elixir
q = try do
  case String.to_integer(params["queries"]) do
    x when x < 1 -> 1
    x when x > 500 -> 500
    x -> x
  end
rescue
  ArgumentError -> 1
end
```

…with something like…

```elixir
q = case Integer.parse(params["queries"]) do
  {x, _} when x < 1 -> 1
  {x, _} when x > 500 -> 500
  {x, _} -> x
  :error -> 1
end
```

…would have a significant effect on performance.
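For anyone who wants to compare or benchmark the two variants outside Phoenix, here is a minimal self-contained sketch. The module and function names (`QueryCount`, `with_rescue/1`, `with_parse/1`) are hypothetical, the clamping uses `max/2` and `min/2` instead of guards, and the `nil` clause for a missing `queries` parameter is an assumption that is not in either snippet above.

```elixir
defmodule QueryCount do
  # Hypothetical module: clamps the "queries" parameter to 1..500,
  # mirroring the two snippets above.
  @min 1
  @max 500

  # Current approach: String.to_integer/1 raises ArgumentError on
  # non-numeric input, which is rescued and mapped to the minimum.
  def with_rescue(raw) do
    try do
      raw |> String.to_integer() |> clamp()
    rescue
      ArgumentError -> @min
    end
  end

  # Assumption (not in either snippet): a missing parameter maps to the minimum.
  def with_parse(nil), do: @min

  # Proposed approach: Integer.parse/1 returns {int, rest} or :error,
  # so bad input never raises.
  def with_parse(raw) when is_binary(raw) do
    case Integer.parse(raw) do
      # Unlike String.to_integer/1, trailing characters are ignored: "12abc" -> 12
      {x, _rest} -> clamp(x)
      :error -> @min
    end
  end

  # Same bounds as the guards in the snippets, expressed with max/2 and min/2.
  defp clamp(x), do: x |> max(@min) |> min(@max)
end
```

Both functions give 1 for `"0"` and 500 for `"9000"`. The one behavioural difference worth knowing about is input like `"12abc"`: the `Integer.parse/1` version accepts it as 12, while the original falls back to 1.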

In short, their code (~677 RPS) was about 2% slower than my code (~691 RPS), and my code was about 0.6% slower than hard-coding `q` (~695 RPS). With differences that small, I’m not sure how much of it was the code and how much was the testing environment.

Unfortunately we missed the deadline for round 13 pull requests.

I must be doing something wrong. When testing on my local machine Phoenix destroys Node.

Tons of reasons for that. Keep in mind, the Phoenix code is not super optimised and will probably evolve for the next round.

Still, there are some interesting things to see:

- Second place in Data updates on the cloud environment… not bad for a first entry.

- Latency in general is low and really consistent. That is really nice: it means latency is quite predictable in production.

- Not bad results at all, especially for a first round.

Idea to boost results: as most of these tests need JSON serialisation, it may make sense to use :jiffy instead of Poison. It is not that unusual to see jiffy in production when you only deal with JSON responses or input and want to get more out of your hardware.

Submit PRs to them to bring Phoenix up to spec?

Someone should definitely send a PR for that, as I believe Poison accounts for most of the time in the JSON results.

Probably some of the Phoenix code there could be improved… but…

From the perspective of a Ruby on Rails guy like me, that Phoenix performance is stunning, considering that it is a full-stack web framework.

Are you talking about the JSON serialization test or all of the tests that return a JSON response?

I tried updating the data updates and multiple queries tests, and it didn’t make a difference. I think that might be because we’re getting 1,915 and 1,945 requests per second there, and Poison doesn’t have any trouble keeping up:

```elixir
defmodule Jsontest do
  def test do
    # 20 random rows, shaped like the results of the query tests
    data = for _ <- 1..20 do
      %{id: :rand.uniform(10_000), random_number: :rand.uniform(10_000)}
    end

    Benchee.run(%{time: 10}, %{
      "jiffy" => fn -> :jiffy.encode(data) end,
      "poison" => fn -> Poison.encode!(data) end
    })
  end
end
```

```
Erlang/OTP 19 [erts-8.1] [source-e7be63d] [64-bit] [smp:4:4] [async-threads:10] [hipe] [kernel-poll:false]
Compiling 1 file (.ex)
Interactive Elixir (1.3.3) - press Ctrl+C to exit (type h() ENTER for help)
iex(1)> Jsontest.test
Erlang/OTP 19 [erts-8.1] [source-e7be63d] [64-bit] [smp:4:4] [async-threads:10] [hipe] [kernel-poll:false]
Elixir 1.3.3
Benchmark suite executing with the following configuration:
warmup: 2.0s
time: 10.0s
parallel: 1
Estimated total run time: 24.0s

Benchmarking jiffy...
Benchmarking poison...

Name             ips        average  deviation         median
jiffy        38.51 K       25.97 μs    ±81.62%       20.00 μs
poison       21.35 K       46.84 μs    ±57.78%       37.00 μs

Comparison:
jiffy        38.51 K
poison       21.35 K - 1.80x slower
```
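A rough back-of-envelope check of why Poison keeps up in those tests, assuming each response encodes a payload comparable to the 20-row list benchmarked above (the real payload depends on the query count) and using ~1,945 requests per second, the higher of the two figures quoted. `EncodeBudget` is a hypothetical helper, not part of any library.

```elixir
defmodule EncodeBudget do
  # Fraction of one CPU core spent on JSON encoding each second:
  # requests per second multiplied by the average encode time in seconds.
  def core_fraction(requests_per_second, avg_encode_us) do
    requests_per_second * avg_encode_us / 1_000_000
  end
end

# Average encode times from the Benchee run above, applied to ~1,945 req/s.
for {name, avg_us} <- [{"poison", 46.84}, {"jiffy", 25.97}] do
  percent = Float.round(EncodeBudget.core_fraction(1_945, avg_us) * 100, 1)
  IO.puts("#{name}: ~#{percent}% of one core")
end
```

Under those assumptions this works out to roughly 9% of one core for Poison and 5% for jiffy, so even a 1.8x faster encoder frees only about 4% of a single core at these request rates, which is consistent with the switch making no visible difference.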

I tried updating the JSON serialization test to use jiffy, but unfortunately my development environment can only manage 11,000 requests per second.

Exactly… what throws me off with the TechEmpower benchmarks is that they apparently classify it as a “Micro” framework. Does anyone know what that’s about?

I think you can change that via a PR as well.

I saw this other JSON library in Elixir Weekly that also claims to be faster than Poison: https://github.com/zackehh/tiny

Oooh, let’s see. Same test as before:

```
Erlang/OTP 19 [erts-8.1] [source-e7be63d] [64-bit] [smp:4:4] [async-threads:10] [hipe] [kernel-poll:false]
Elixir 1.3.4
Benchmark suite executing with the following configuration:
warmup: 2.0s
time: 10.0s
parallel: 1
inputs: none specified
Estimated total run time: 36.0s

Benchmarking jiffy...
Benchmarking poison...
Benchmarking tiny...

Name             ips        average  deviation         median
jiffy        39.40 K       25.38 μs    ±81.69%       20.00 μs
tiny         24.06 K       41.55 μs    ±65.97%       32.00 μs
poison       19.16 K       52.19 μs    ±53.85%       42.00 μs

Comparison:
jiffy        39.40 K
tiny         24.06 K - 1.64x slower
poison       19.16 K - 2.06x slower
```

For what it’s worth, my PR that classifies Phoenix as full-stack (and compares it to Cowboy) was merged yesterday. So at least in the next round we can filter out the noise and make meaningful comparisons.

I have also started some work on “optimizing” Phoenix here:

By optimizing I just mean fixing some default values. I have no idea what I’m doing, so I would appreciate it if experienced Phoenix devs had a look.

I haven’t tried to actually benchmark things to see if my optimizations make any difference. I do plan to write some tests though to ensure I don’t break anything.

Is this already supported in Phoenix? I know that you can swap out the `json_decoder` in Plug, but I’m not sure if something similar exists in Phoenix for encoding.

Never mind. Answered my own question: https://hexdocs.pm/phoenix/Phoenix.Template.html#content
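A minimal sketch of plugging jiffy in, assuming the Phoenix 1.2/1.3-era behaviour described on that page: a format encoder is any module exporting `encode_to_iodata!/1`, registered through config such as `config :phoenix, :format_encoders, json: JiffyEncoder` in `config/config.exs`. `JiffyEncoder` is a hypothetical name, and the app is assumed to already depend on jiffy. Whether controller `json/2` calls go through the same setting may depend on the Phoenix version, so it is worth checking before benchmarking.

```elixir
defmodule JiffyEncoder do
  # Hypothetical wrapper: Phoenix format encoders are expected to export
  # encode_to_iodata!/1. :jiffy.encode/1 already returns iodata, so the
  # result can be passed straight through.
  def encode_to_iodata!(data), do: :jiffy.encode(data)
end
```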

Sorry, I just found that @thousandsofthem already posted the result above.

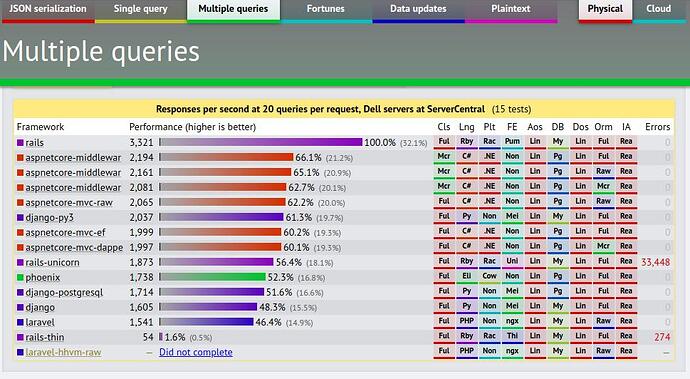

Here is their round 13 result:

I previously used the Play framework and Servlets, and I don’t think they can even match Phoenix.

Disclaimer: see the many previous discussions about being wary of benchmarks, and be even more wary of Elixir benchmarks, since they’re almost never representative of real-world code. Other benchmarks, like Which Framework is Fastest or A Tale of 3 Kings, provide a different view into things and are good for perspective.

That said… the Round 15 preview is out. It’s based on the code as it existed back in November, but overall things are about the same. Source code for the Elixir tests is here.

I know we had some folks talking about doing PRs in the Round 14 thread.

It would be nice to see the results with the faster Jason JSON library too. I saw another thread discussing HiPE, and the Jason docs even mention that it outperforms Jiffy when compiled with HiPE. I’ve never touched HiPE, but what I saw seemed to indicate that if not all of the code was HiPE-compatible, there would be high switching costs. Would it make sense to turn on HiPE here, and how can we identify whether there would be switching costs?

I know they aren’t representative, but at the very least it’s still worth keeping things up to date, since people will look at them.

Just a quick note to say I’ve merged all threads on this, as there have been some good discussions and critiques in some of the older threads.

“What” makes Rails perform better than all other full-stack frameworks in the Multiple Queries test on physical servers? Can we port that “what” to Phoenix/Ecto?