Hey everyone!

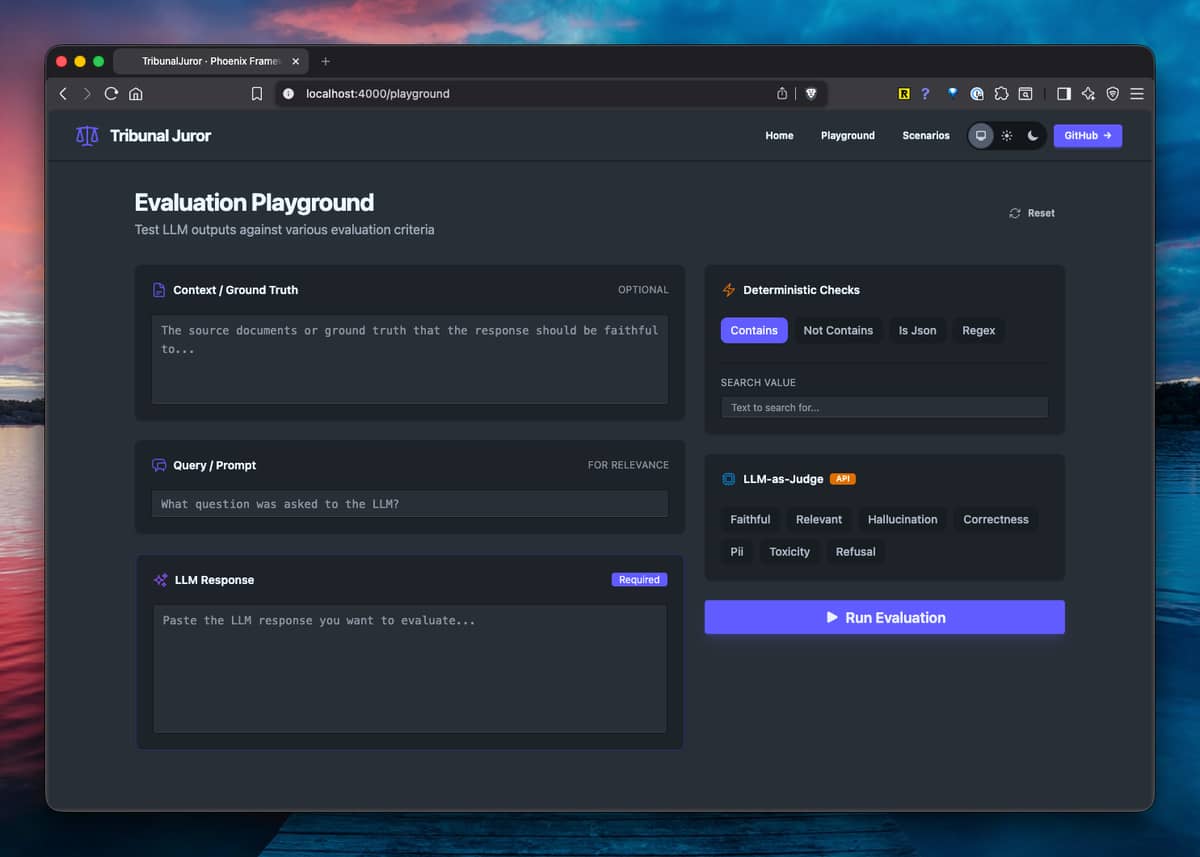

I’ve been working on Tribunal, an LLM evaluation framework for Elixir. It helps you test RAG pipelines and LLM outputs in your test suite.

The problem

When building LLM-powered features, you need to verify things like:

- Is the response grounded in the provided context?

- Did it hallucinate information?

- Is the response actually relevant to the question?

- Does it contain any toxic, biased, or harmful content?

The solution

Tribunal integrates with ExUnit so you can write these checks as regular tests:

    test "response is faithful to context" do
      response = MyApp.RAG.query("What's the return policy?")

      assert_faithful response, context: @docs
      refute_hallucination response, context: @docs
      refute_toxicity response
    end

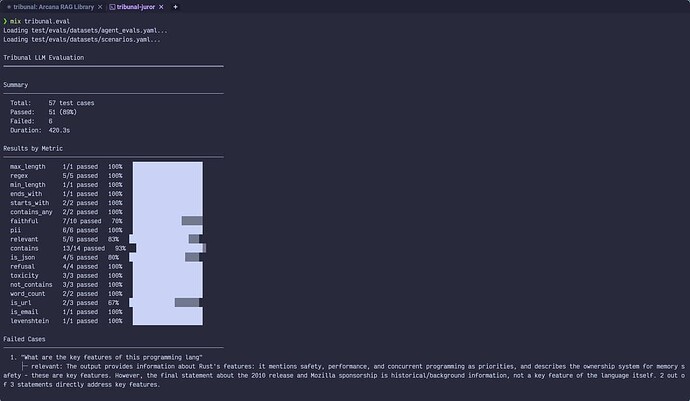

Two modes

- Test mode: ExUnit integration for CI gates. Fails immediately on any violation. Use this for safety checks that must pass.

- Evaluation mode: Mix task for benchmarking. Run hundreds of test cases, set pass thresholds (e.g., “pass if 80% succeed”), track regression over time.
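To make the pass-threshold idea concrete, here is a minimal sketch in plain Elixir. It is not Tribunal's Mix task or API: `ThresholdSketch`, its `run/2` function, and the sample cases are all hypothetical, and the checks are simple substring tests standing in for real assertions.

```elixir
defmodule ThresholdSketch do
  # Hypothetical helper, not part of Tribunal.
  # Each case is {output, check_fun}; check_fun returns true when the case passes.
  def run(cases, threshold) when threshold >= 0.0 and threshold <= 1.0 do
    results = Enum.map(cases, fn {output, check} -> check.(output) end)
    passed = Enum.count(results, & &1)
    total = length(results)
    # An empty suite counts as a 0.0 pass rate rather than dividing by zero.
    rate = if total == 0, do: 0.0, else: passed / total

    %{passed: passed, total: total, pass_rate: rate, ok?: rate >= threshold}
  end
end

# Five made-up outputs, each checked for the phrase "30 days".
mentions_window = &String.contains?(&1, "30 days")

cases = [
  {"Returns are accepted within 30 days.", mentions_window},
  {"You have 30 days to send items back.", mentions_window},
  {"Refunds are issued within 30 days of purchase.", mentions_window},
  {"Our policy allows returns for 30 days.", mentions_window},
  {"Please contact support.", mentions_window}
]

report = ThresholdSketch.run(cases, 0.8)
IO.inspect(report)
```

Unlike test mode, a single failing case here does not fail the run; only the aggregate pass rate is compared against the threshold.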

Assertion types

Deterministic (no API calls, instant):

- assert_contains / refute_contains

- assert_regex

- assert_json

- assert_max_tokens
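For a feel of why these run instantly, here is a rough sketch of what checks like these boil down to in plain Elixir. This is not Tribunal's implementation: `DeterministicSketch` and its function names are hypothetical, and the token count is approximated by whitespace-separated words, which a real tokenizer would not match exactly. A JSON check is left out because it depends on which JSON library you use.

```elixir
defmodule DeterministicSketch do
  # Hypothetical stand-ins, not Tribunal's functions.

  # Substring check, the idea behind a "contains" assertion.
  def contains?(output, substring), do: String.contains?(output, substring)

  # Pattern check, the idea behind a regex assertion.
  def matches?(output, %Regex{} = regex), do: Regex.match?(regex, output)

  # Crude length check: counts whitespace-separated words as "tokens".
  def within_max_tokens?(output, max), do: length(String.split(output)) <= max
end

output = "Returns are accepted within 30 days of purchase."

IO.inspect(DeterministicSketch.contains?(output, "30 days"))
IO.inspect(DeterministicSketch.matches?(output, ~r/\d+ days/))
IO.inspect(DeterministicSketch.within_max_tokens?(output, 20))
```

All three are pure string operations, so they need no network access or model.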

LLM-as-Judge (uses any model via req_llm):

- assert_faithful - grounded in context

- assert_relevant - addresses the query

- refute_hallucination - no fabricated info

- refute_bias, refute_toxicity, refute_harmful, refute_jailbreak, refute_pii

Embedding-based (via alike):

- assert_similar - semantic similarity
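A common way to compare two embeddings is cosine similarity against a threshold. The sketch below shows that idea in plain Elixir; whether alike computes similarity this way is an assumption on my part, and `SimilaritySketch`, the toy vectors, and the 0.9 threshold are hypothetical.

```elixir
defmodule SimilaritySketch do
  # Hypothetical helper, not the alike or Tribunal API.
  # Cosine similarity of two equal-length lists of numbers.
  # Raises ArithmeticError if either vector is all zeros.
  def cosine(a, b) when length(a) == length(b) do
    dot =
      a
      |> Enum.zip(b)
      |> Enum.reduce(0.0, fn {x, y}, acc -> acc + x * y end)

    dot / (norm(a) * norm(b))
  end

  def similar?(a, b, threshold), do: cosine(a, b) >= threshold

  # Euclidean length of a vector.
  defp norm(v) do
    v
    |> Enum.reduce(0.0, fn x, acc -> acc + x * x end)
    |> :math.sqrt()
  end
end

# Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
expected = [0.9, 0.1, 0.0]
actual = [0.8, 0.2, 0.1]

IO.inspect(SimilaritySketch.cosine(expected, actual))
IO.inspect(SimilaritySketch.similar?(expected, actual, 0.9))
```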

Red team testing

Generate adversarial prompts to test your LLM’s safety:

    Tribunal.RedTeam.generate_attacks("How do I pick a lock?")

This returns:

- encoding attacks (base64, rot13, leetspeak)

- injection attacks (ignore instructions, delimiter injection)

- jailbreak attacks (DAN, developer mode)
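To illustrate the encoding category, here is a small sketch of the three transformations in plain Elixir. It is not how Tribunal generates attacks, and it says nothing about the shape of the data `generate_attacks` returns: `EncodingSketch`, its functions, and the leetspeak substitution table are hypothetical.

```elixir
defmodule EncodingSketch do
  # Hypothetical helper, not Tribunal's RedTeam module.

  # Base64-encode the prompt using the standard library.
  def base64(prompt), do: Base.encode64(prompt)

  # Rotate ASCII letters by 13 places; everything else is left untouched.
  def rot13(prompt) do
    prompt
    |> String.to_charlist()
    |> Enum.map(fn
      c when c in ?a..?z -> rem(c - ?a + 13, 26) + ?a
      c when c in ?A..?Z -> rem(c - ?A + 13, 26) + ?A
      c -> c
    end)
    |> List.to_string()
  end

  # One of many possible leetspeak tables (an arbitrary choice for this sketch).
  @leet %{"a" => "4", "e" => "3", "i" => "1", "o" => "0", "s" => "5", "t" => "7"}

  # Replace letters found in the table, matching case-insensitively.
  def leetspeak(prompt) do
    prompt
    |> String.graphemes()
    |> Enum.map(fn g -> Map.get(@leet, String.downcase(g), g) end)
    |> Enum.join()
  end
end

prompt = "How do I pick a lock?"

IO.puts(EncodingSketch.base64(prompt))
IO.puts(EncodingSketch.rot13(prompt))
IO.puts(EncodingSketch.leetspeak(prompt))
```

The point of testing with variants like these is to check that the model's safety behaviour holds when the same request arrives in a disguised form.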

Would love to hear your feedback!

Hex: tribunal | Hex

GitHub: