I was thinking:

What if I could split some of the OTP applications out of my Phoenix app and move them to another server?

I got this idea because I was hitting an out-of-memory error, and I wanted to see whether I could put one of my three free 256 MB fly.io VMs to use.

```
[info][ 41.509215] Out of memory: Killed process 255 (beam.smp) total-vm:1880020kB, anon-rss:141076kB, file-rss:0kB, shmem-rss:69636kB, UID:65534 pgtables:624kB oom_score_adj:0
[error]could not make HTTP request to instance: connection closed before message completed
[info][os_mon] cpu supervisor port (cpu_sup): Erlang has closed
[info][os_mon] memory supervisor port (memsup): Erlang has closed
[info] INFO Main child exited with signal (with signal 'SIGKILL', core dumped? false)
[info] INFO Starting clean up.
[info] WARN hallpass exited, pid: 256, status: signal: 15 (SIGTERM)
[info] INFO Process appears to have been OOM killed!
[info]2023/08/16 00:12:28 listening on .....
[info][ 43.303638] reboot: Restarting system
[info]Out of memory: Killed process
```
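Before splitting anything, it may help to see where the BEAM's memory actually goes on the 256 MB VM. The sketch below is a hypothetical `MemoryReport` module (not part of any library) that could be pasted into a remote IEx session; it only uses `:erlang.memory/0` and `Process.info/2`.

```elixir
defmodule MemoryReport do
  @moduledoc "Hypothetical helper for eyeballing BEAM memory usage from IEx."

  @mb 1_048_576

  # Memory per category (total, processes, binary, ets, code, ...) in MB.
  def by_category do
    for {category, bytes} <- :erlang.memory(), do: {category, Float.round(bytes / @mb, 1)}
  end

  # The `n` processes using the most memory, as {pid, registered_name, bytes}.
  # registered_name is [] for processes that are not registered.
  def top_processes(n \\ 5) do
    Process.list()
    |> Enum.map(fn pid -> {pid, Process.info(pid, [:memory, :registered_name])} end)
    # Process.info/2 returns nil for processes that exited in the meantime.
    |> Enum.reject(fn {_pid, info} -> is_nil(info) end)
    |> Enum.map(fn {pid, info} -> {pid, info[:registered_name], info[:memory]} end)
    |> Enum.sort_by(fn {_pid, _name, bytes} -> bytes end, :desc)
    |> Enum.take(n)
  end
end
```

If one application's processes dominate the list, that application is the natural candidate to move to the other VM.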

So say I have an app I want to break apart. I’m gonna go with libcluster for this example.

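`application.ex` on server A:

```elixir
def start(_type, _args) do
  topologies = [
    cluster_example: [
      strategy: Cluster.Strategy.Epmd,
      config: [
        # Both nodes must be started with these names and share the same cookie
        hosts: [
          :"app@foo.dev",
          :"app@bar.dev"
        ]
      ]
    ]
  ]

  children = [
    # Start the Ecto repository
    ClusterExample.Repo,
    # Start the Telemetry supervisor
    ClusterExampleWeb.Telemetry,
    # Start a worker by calling: ClusterExample.Worker.start_link(arg)
    # {ClusterExample.Worker, arg}
    # Connect this node to the hosts listed in the topology
    {Cluster.Supervisor, [topologies, [name: ClusterExample.ClusterSupervisor]]}
  ]

  opts = [strategy: :one_for_one, name: ClusterExample.Supervisor]
  Supervisor.start_link(children, opts)
end
```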

And then there's server B:

```elixir
def start(_type, _args) do
  topologies = [
    cluster_example: [
      strategy: Cluster.Strategy.Epmd,
      config: [
        # Both nodes must be started with these names and share the same cookie
        hosts: [
          :"app@foo.dev",
          :"app@bar.dev"
        ]
      ]
    ]
  ]

  children = [
    # Start the PubSub system
    {Phoenix.PubSub, name: ClusterExample.PubSub},
    # Start the Endpoint (http/https)
    ClusterExampleWeb.Endpoint,
    # Start a worker by calling: ClusterExample.Worker.start_link(arg)
    # {ClusterExample.Worker, arg}
    # Connect this node to the hosts listed in the topology
    {Cluster.Supervisor, [topologies, [name: ClusterExample.ClusterSupervisor]]}
  ]

  opts = [strategy: :one_for_one, name: ClusterExample.Supervisor]
  Supervisor.start_link(children, opts)
end
```

Would this work the way I assume it would? That is, will function calls get routed to whichever server hosts the relevant application?
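For context on that question: libcluster only connects the nodes; it does not redirect ordinary module calls to another node. For example, a call to `ClusterExample.Repo` made on server B looks up the Repo by its locally registered name, and nothing is started under that name on B. Cross-node work has to be addressed explicitly, for instance with `:erpc.call/4` or a process registered in a cluster-wide registry. Below is a minimal sketch of the latter, assuming a hypothetical `ClusterExample.Counter` service started on one node and called from any connected node.

```elixir
defmodule ClusterExample.Counter do
  # Hypothetical service, only here to illustrate an explicit cross-node call.
  use GenServer

  # Registering under {:global, name} makes the name resolvable from every
  # connected node, not just the node the process runs on.
  def start_link(initial \\ 0) do
    GenServer.start_link(__MODULE__, initial, name: {:global, __MODULE__})
  end

  # Callers on any node reach the single process through :global.
  def increment, do: GenServer.call({:global, __MODULE__}, :increment)

  @impl true
  def init(initial), do: {:ok, initial}

  @impl true
  def handle_call(:increment, _from, count), do: {:reply, count + 1, count + 1}
end
```

In this sketch, server A would add `ClusterExample.Counter` to its `children`, and code on server B would simply call `ClusterExample.Counter.increment()`. If a second node tries to start it, `start_link` returns `{:error, {:already_started, pid}}` because the global name is taken.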

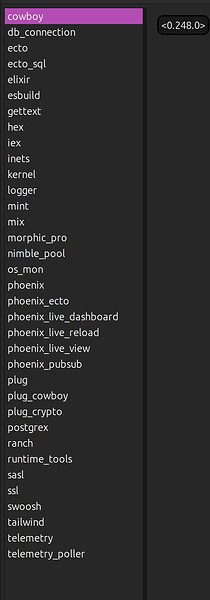

Looking at `:observer.start()`, I assume I should be able to move any of these applications to another server, correct?
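The applications listed in observer can also be inspected from code, which makes it easier to check what ends up running where once the nodes are connected. This is a sketch with a hypothetical `ClusterInfo` module; it uses `Application.started_applications/0`, `Node.list/0`, and `:rpc.call/4` for the remote case.

```elixir
defmodule ClusterInfo do
  # Hypothetical helper for checking which applications run on which node.

  # Names of the OTP applications started on the current node.
  def local_apps do
    for {app, _description, _vsn} <- Application.started_applications(), do: app
  end

  # The current node plus every node it is connected to.
  def nodes, do: [Node.self() | Node.list()]

  # Application names on a remote node, or {:badrpc, reason} on failure.
  def apps_on(node) do
    case :rpc.call(node, Application, :started_applications, []) do
      {:badrpc, _reason} = error -> error
      apps -> for {app, _description, _vsn} <- apps, do: app
    end
  end
end
```

Note that moving an application means starting it on the other node instead; any processes it registers with a plain local name are still only reachable by that name on the node where they run.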

I guess I’m just not sure what that would look like.
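Since both servers in the example run the same `ClusterExample` codebase, one possible shape is a single release that picks its children at boot. This is only a sketch: the `NODE_ROLE` environment variable and the `ClusterExample.Roles` module are hypothetical, and the role names simply mirror server A ("db") and server B ("web").

```elixir
defmodule ClusterExample.Roles do
  # Hypothetical: choose children from a role so both servers ship one release.

  # Shared by every node; libcluster must run everywhere so the nodes connect.
  def topologies do
    [
      cluster_example: [
        strategy: Cluster.Strategy.Epmd,
        config: [hosts: [:"app@foo.dev", :"app@bar.dev"]]
      ]
    ]
  end

  # Mirrors server A. An unknown role raises FunctionClauseError at boot.
  def children("db"), do: [ClusterExample.Repo, ClusterExampleWeb.Telemetry]

  # Mirrors server B.
  def children("web"),
    do: [{Phoenix.PubSub, name: ClusterExample.PubSub}, ClusterExampleWeb.Endpoint]

  def children_for(role) do
    children(role) ++
      [{Cluster.Supervisor, [topologies(), [name: ClusterExample.ClusterSupervisor]]}]
  end
end
```

In `start/2` this would replace the hard-coded list with something like `children = ClusterExample.Roles.children_for(System.get_env("NODE_ROLE", "web"))`, with each fly.io VM setting its own `NODE_ROLE`.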