Hi all, I’ve been reading a lot about the “let it crash” idea and how supervised processes and message passing make an Elixir app very stable, but I’m struggling to understand how this differs from other languages. What I read is that if a process fails in Elixir it just terminates and is restarted, without taking down the whole application. But how is that different from a Java app, for example? If I have a site and it throws a NullPointerException or any other exception, that doesn’t mean that the whole app and Tomcat go down. That request is dead, but if I click back in the browser or go to the URL again the site is still up. So I could just stop handling errors in my Java app: users would see error messages, but the whole app wouldn’t go down. So what is different with an Elixir app?

Evaluating “let it crash” in a situation that is largely stateless to begin with (HTTP requests) misses some of the origin story of this philosophy.

A good place to start would be to read https://ferd.ca/the-zen-of-erlang.html since anything most of us are going to say by way of an introduction to the topic will largely amount to a rephrasing of that post.

Notably, even in your stateless http requests there is a major difference: In Java, a crash due to your request will take out OTHER people’s requests too, since any single Java thread is handling many different requests concurrently, and if it dies due to your error everyone else will also go down. In Elixir / Erlang, crashing your process leaves everyone else’s utterly unaffected.

This makes crashing in Java expensive. It’s expensive in terms of performance because threads are heavyweight, and it’s expensive in terms of the cost to your users, since crashing is not isolated merely to the user whose state caused the crash. Consequently, design patterns that utilize crashing as a way to ensure state guarantees are not readily available to Java. For more on those design patterns, I recommend the aforementioned link.

Going to read that article now, but I’m still confused about the Java example. If I write a web app that takes two numbers from the user in the browser and returns the quotient, and a user tries to divide by zero, that request will crash and the user will see an error message, but other users in other browsers in different parts of the world won’t be affected by that crash (as far as I know). Maybe this article will clarify a few things.

thanks

If their requests ended up on the same JVM scheduler while your request broke it, everything controlled by that scheduler will break apart. On servers with small loads you won’t see a difference, since often only one scheduler is busy at all, but on larger ones with a lot of requests per second those situations can happen.

For me in real office life, it is like this:

We have coded a Go application for a customer. There is a huge machinery spun around it to restart it when a crash happens, and we had to code very carefully to avoid those crashes altogether, since restarts are expensive: the application has a spin-up time of about 5 seconds.

For the first iteration of the software I coded a feature-complete clone in Erlang, which was able to handle requests even faster and was easier to read (because there was only a happy path, rather than 75% of the codebase being there to handle errors). The external supervisor was replaced by my own, BEAM-internal one. It was even capable of supervising other auxiliary tools on the same machine, where the Go program had to rely on them being in a working state (and crashed if they were not running, at least until the very last iteration). The actual workhorses of the program were back instantly, and global state or config was not affected by a single crashing worker.

I was even able to push it into a second iteration, until the customer’s CTO decided that Erlang was too “eighties” and that they preferred to have the program implemented in Go.

NB: The Go program relies on RabbitMQ; the Erlang program didn’t…

NB2: The Go program was developed by a team of 4, the Erlang version by me alone in my spare time. So the Erlang version could have been production ready much earlier than the Go program…

This makes my brain hurt…

Well, his exact words were “we decided to go with modern technologies, so we want the Go version”, but I really got his point when he said that…

One thing that has stuck with me is something Joe Armstrong said: one way to highlight the difference between BEAM languages and other languages is that we don’t have web servers that handle 20 million sessions, we have 20 million web servers that handle single sessions.

So when one crashes, it only impacts that single session …and is usually restarted automatically if being supervised - hence it’s not catastrophic to ‘let it crash’.

…should have said, well, go with Elixir

I tried, but they explained that it was the same technology with a new coat of paint. When I wanted to tell them something about “modern” CPUs and IBM, my boss held me back.

At least the other devs have been keeping an eye on the BEAM since then and no longer consider it only as “the ugly runtime necessary for rabbit”.

I would have referred them to something Joe Armstrong has said (and what I posted not long ago)…

You’d think a CTO would be aware of stuff like this, but it’s ok - horses for courses right…

Well, that decision was made in February '17. And to be honest, with the Go program we will probably be able to earn more in terms of support fees.

I love Elixir, Erlang and the BEAM in general, but currently I can only use Erlang while maintaining Exercism’s Erlang track.

I dislike Go, but I have learned to live with it. It pays for my rent, bread and butter, after all… And I think it will stay like this for quite a while; Go is much better than the available alternative at my current workplace: JavaScript…

That’s a huge argument!

I guess they decided to use JS for the same reason, right???

No, the JavaScript stuff is legacy only: code we get from the client and need to clean up. Often we try to migrate parts of those JS apps to Go.

I’m not convinced by the arguments. Maybe there’s a learning opportunity for me or us. Let me know if this makes sense:

I don’t understand what “JVM scheduler” refers to here. I never shipped JVM web apps, so idk if this applies to specific web servers/web frameworks, but I thought JVM threads were OS threads and that they were scheduled by the OS itself.

In which frameworks/servers is this true? From my .NET experience, having more than one request per OS thread would only be true on a framework supporting Async IO, that can lift up green threads when they do IO, and let other request green threads run meanwhile. Otherwise I can only visualize one OS thread per request.

Even in Async IO enabled scenarios, where the request green thread is sharing its OS thread with other ones, exceptions still shouldn’t kill the OS thread. The framework has to be able to capture unhandled user exceptions to be able to show error pages, have APMs collect stats and have libraries automatically log errors. All this being true, the OS thread dying should be a bug in the web framework.
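To make that point concrete, here is a minimal sketch in Python (chosen only for brevity, not Java or .NET) of a thread-pool server whose framework layer catches whatever a handler throws. The names `handle_request` and `serve` are hypothetical, and the division handler is borrowed from the divide-by-zero example earlier in the thread. It only illustrates that a catch-all around each task keeps one failing request from affecting the others; it says nothing about how a particular JVM server is implemented.

```python
from concurrent.futures import ThreadPoolExecutor


def handle_request(a, b):
    # Hypothetical request handler: divides two user-supplied numbers.
    return a / b


def serve(requests):
    """Run every request on a shared thread pool, catching handler errors."""
    results = []
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = [pool.submit(handle_request, a, b) for a, b in requests]
        for future in futures:
            try:
                results.append(("ok", future.result()))
            except ZeroDivisionError as exc:
                # The "framework" turns the failure into an error response.
                results.append(("error", str(exc)))
    return results


# The second request divides by zero; the first and third still succeed.
RESULTS = serve([(10, 2), (1, 0), (9, 3)])
```

The worker threads survive the failing task, so in this model the isolation comes from the catch-all rather than from the thread dying and being replaced.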

In this case, crashing would be throw new SomeException(), and the cost of that is collecting the stack. That costs CPU time, which, depending on your performance constraints, is not that relevant.

If all of this is true, the only difference I see is having constructs for failure isolation and recovery, which for us in the BEAM are Processes and Supervisors. Akka, a JVM Actor Model library, also advocates let it crash.

A beginner’s opinion (I need a beginner badge or something, getting tired of mentioning it)

The difference is that the future will bring CPUs with 1024 cores.

The OS will stop being responsible for scheduling and that will be delegated to the application developers.

Languages like Java, Go, PHP, C#, Haskell, and so on will become the equivalent of a man who’s trying to run with 1024 legs attached to the same body, while Elixir/Erlang will be a happy centipede.

Erlang’s VM will handle scheduling for us, while Java, Go, Haskell, etc. developers will have to do that themselves.

Then comes error handling, which in other languages you have to write error handling code for 1024 cores, while Elixir/Erlang devs will slap the “Let it crash” sticker and not go insane.

Feel free to correct me if any of the information is incorrect.

> The OS will stop being responsible for scheduling and that will be delegated to the application developers.

What makes you think that? Even the Erlang VM is highly dependent on the OS for scheduling. It has a set of scheduler threads to run processes, but also thread pools for I/O, running dirty NIFs, etc., and it’s up to the OS to manage these.

> Erlang’s VM will handle scheduling for us, while Java, Go, Haskell, etc. developers will have to do that themselves.

All the languages you cite have user-level scheduling.

https://morsmachine.dk/go-scheduler

> Then comes error handling, which in other languages you have to write error handling code for 1024 cores, while Elixir/Erlang devs will slap the “Let it crash” sticker and not go insane.

I think you’re conflating two things. “Let it crash” helps keep the code simpler by moving the error handling out of the business logic. It’s not harder to handle errors when you have 1024 threads than when you have 2, the code is the same.

To point a few differences:

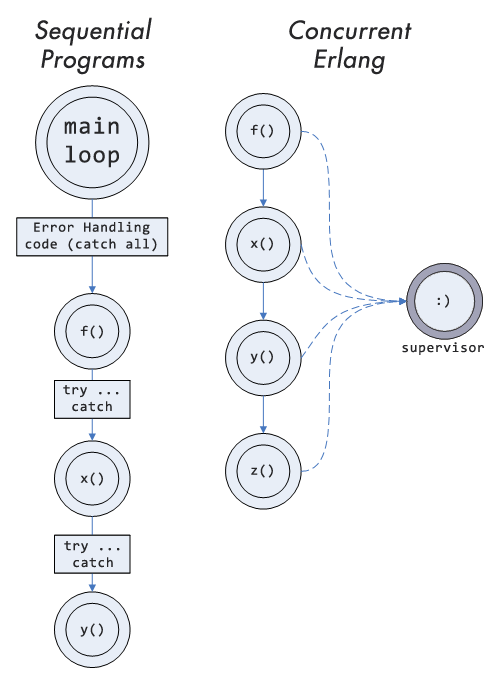

- “Let it crash” helps centralize the error handling in a supervisor process, rather than have it spread around your business logic. This diagram in An Open Letter to the Erlang Beginner (or Onlooker) represents this:

-

All resources are owned by a process in Erlang, and the VM guarantees clean-up of resources once the process dies. In Java, Python, etc., if your response handler opens a file then throws a NullPointer at some random location later, the framework’s exception handler will catch it and the application will seem OK… until it runs out of file handles after this happens too many times. I’ve seen a Twisted app lock up hard because of this. So you have to be more careful with your error handling, and spread try/catch over the business logic.

-

This extends to other types of resources, for instance registered names, DB connections, or locks of all kinds. In most languages, if you hit a problem where threads are grabbing DB connections then throwing an exception without releasing the connection, your app will quickly run out of connections and die. In Erlang the connection (a process) can keep a monitor on the owner (another process), and release itself as soon as the owner dies, no matter the reason. So the robustness of your application depends on the correctness of the DB connection’s code, which is rather small, rather than depending on the correctness of every single request handler that uses a DB connection. In the Erlang world this is called the error kernel, and you want to keep it as small as possible.
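The leak described in the last two points can be reproduced with a small Python sketch. Everything here is hypothetical and simplified: `Pool` is a toy stand-in for a connection pool (just a counter), and `run` plays the role of a framework’s catch-all exception handler. It shows the problem on the non-BEAM side only; it does not model Erlang monitors.

```python
class Pool:
    """Toy connection pool: only counts how many connections are free."""

    def __init__(self, size):
        self.free = size

    def checkout(self):
        if self.free == 0:
            raise RuntimeError("pool exhausted")
        self.free -= 1

    def release(self):
        self.free += 1


def leaky_handler(pool, value):
    pool.checkout()
    result = 100 // value  # raises ZeroDivisionError when value == 0
    pool.release()         # never reached when the line above raises
    return result


def careful_handler(pool, value):
    pool.checkout()
    try:
        return 100 // value
    finally:
        pool.release()     # every handler has to remember to do this


def run(handler, pool, inputs):
    """Framework-style catch-all: a failed request is reported, not fatal."""
    outcomes = []
    for value in inputs:
        try:
            outcomes.append(handler(pool, value))
        except Exception as exc:
            outcomes.append(type(exc).__name__)
    return outcomes


# Two bad requests leak both connections, so the valid third request fails.
LEAKY = run(leaky_handler, Pool(2), [0, 0, 5])
# With try/finally in the handler, the third request succeeds.
CAREFUL = run(careful_handler, Pool(2), [0, 0, 5])
```

The application “seems OK” after the first two errors, and only the third, perfectly valid request reveals the damage. The fix works, but it has to be repeated in every handler, which is the contrast with a small error kernel drawn above.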

What makes you think that the OS will continue to be responsible for 1024 cores and how do you see it working?

Do Quasar and Spark handle scheduling, or does the developer?

I’m not sure whether you mention user-level scheduling as a benefit on 1024 cores or not. If you mention it as a benefit, could you explain why and what the implementation looks like?

Let it crash, as I understand it, means that when things go bad on 1024 cores you let it crash, so that the processes can start with clean state. How does Java handle mutable state on 1024 cores?

Can you explain the benefits of writing mutexes on 1024 cores?

Thanks!

Ouch… So if you really do a process per request, it forks for every single request that comes in? This sounds rather expensive if there is no pooling, which again would mean serialisation of requests at the end…

But as we said again, do not only think about web requests.

Let’s think about a connection from my BEAM application to another server, let’s say a database. For some reason the connection is dropped. The managing BEAM process will crash, some centralised crash handler writes debug info to the logs, and then the connection is restarted by the supervisor.
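As a rough single-threaded analogy of that flow (crash, central logging, restart with fresh state), here is a Python sketch. It is not how OTP supervisors work internally: the BEAM gets this from isolated processes and exit signals, while this sketch has to use try/except. `Connection`, `supervise` and the scripted `drop_on` list are all hypothetical names made up for the illustration.

```python
class Connection:
    """Hypothetical worker: a connection that drops on scripted messages."""

    def __init__(self, drop_on):
        self.drop_on = drop_on  # shared script of messages that will fail
        self.sent = []          # per-instance state, lost when it crashes

    def send(self, msg):
        if self.drop_on and self.drop_on[0] == msg:
            self.drop_on.pop(0)
            raise ConnectionError(f"connection lost while sending {msg!r}")
        self.sent.append(msg)


def supervise(start_worker, messages, max_restarts=3):
    """Feed messages to a worker, replacing it with a fresh one on a crash."""
    crash_log = []
    worker = start_worker()
    for msg in messages:
        try:
            worker.send(msg)
        except ConnectionError as exc:
            crash_log.append(str(exc))         # central place for debug info
            if len(crash_log) > max_restarts:  # give up if it keeps crashing
                raise
            worker = start_worker()            # restart with clean state
    return worker, crash_log


DROPS = ["b"]
WORKER, CRASH_LOG = supervise(lambda: Connection(DROPS), ["a", "b", "c"])
```

After the run, the replacement worker has only seen `"c"`: the state of the crashed worker is gone, and the message that triggered the crash is not retried. The worker code itself contains no error handling, only the happy path.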

Something similar in Java involves massive rethrowing of exceptions to get to the point where the centralised crash handler lives (which is not even the place where we would try to restart). Also, some code has to deal with errors that usually can’t occur there.

But to be honest, the best way to understand it is to actually use it.

There are a couple of facets to the let-it-crash story.

At the core of it all is the idea of failing fast. This is not something exclusive to Erlang, and I believe it’s generally a good practice. We want to fail as soon as something is off. By doing this, we ensure that the symptom and the cause are one and the same, which simplifies the problem analysis. By looking at the error log, we can tell both what went wrong and why.
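A small Python sketch of the difference, with made-up function names (`read_port_lenient`, `read_port_fail_fast`, `next_port`) and a made-up config key: the lenient reader hides a missing setting, so the error shows up later in unrelated code, while the fail-fast reader raises at the spot where the mistake actually is.

```python
def read_port_lenient(config):
    # Hides the mistake: a missing "port" quietly becomes None.
    return config.get("port")


def read_port_fail_fast(config):
    # Fails right where the mistake is: the KeyError names the missing key.
    return int(config["port"])


def next_port(port):
    # Code far away from the config loader that assumes a valid integer.
    return port + 1


def error_for(read_port, config):
    """Return the exception raised while using read_port(config), or None."""
    try:
        next_port(read_port(config))
    except Exception as exc:
        return exc
    return None


# Lenient: the symptom is a TypeError inside next_port, far from the cause.
LENIENT_ERROR = error_for(read_port_lenient, {})
# Fail fast: the symptom is a KeyError for "port", which is also the cause.
FAIL_FAST_ERROR = error_for(read_port_fail_fast, {})
```

In the first case the log points at `next_port`, and someone has to trace the `None` back to its origin. In the second case the log entry already is the diagnosis.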

Now, of course, we don’t want our whole system to crash due to a single error, so we need to isolate a failure of a single task. In many popular languages, this is done by wrapping the task execution in some sort of a catch-all statement, or by running the task in a separate OS process. So for example, as someone mentioned here, a typical web framework will indeed do this to make sure that the error is caught and reported properly.

However, try-catch is not a perfect solution, for a couple of reasons. First, if shared mutable data is used, a task which fails in the middle could have left the data in an inconsistent state, which means that subsequent tasks might trip over it.
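Here is a minimal Python sketch of that first problem. The `transfer` and `handle` functions and the account names are invented for the illustration: the task updates a shared dictionary in two steps and fails between them, the catch-all reports an error, and the shared data stays half-updated for every later task.

```python
def transfer(accounts, src, dst, amount):
    accounts[src] -= amount  # step 1: debit the source
    accounts[dst] += amount  # step 2: credit; KeyError if dst is unknown


def handle(accounts, src, dst, amount):
    """Catch-all wrapper, as a framework would put around each task."""
    try:
        transfer(accounts, src, dst, amount)
        return "ok"
    except Exception:
        return "error"


# Shared mutable state used by all tasks.
ACCOUNTS = {"alice": 100, "bob": 0}

# "carol" does not exist, so the task fails after the debit already happened.
STATUS = handle(ACCOUNTS, "alice", "carol", 30)
TOTAL_AFTER = sum(ACCOUNTS.values())
```

The error was caught and the program keeps running, but 30 units have vanished from the shared state, so the failure was contained only in appearance.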

Moreover, a task itself could spawn additional concurrent subtasks (threads or lightweight threads), and we need to make sure that the failures of these threads are properly caught. A great example of this is the Go language. If a web request handler spawns another goroutine, and there’s an unrecovered panic (aka uncaught exception) in that goroutine, the entire program crashes.

In contrast, using separate OS processes helps with this, but you can’t really run one OS process per task (e.g. a request), so we usually group them somehow (which to me is what microservices are about). Now, you need to run multiple OS processes, and you need an extra piece of tech (e.g. systemd) to start these things in a proper order, restart failing OS processes, and maybe take down related OS processes as well.

With BEAM, all of these issues (and some others) are taken care of directly in our primary tech. If you don’t want a failure of one task to crash other tasks, you’ll typically run the task in a separate process, and fail fast there. With errors being isolated, a failing process doesn’t take down anything else with it (unless you ask for it explicitly via links). Shared-nothing concurrency also ensures that a failing thing can’t leave any junk data behind. Moreover, it ensures that whatever crashes, the associated resources (memory, open sockets or file handles) are properly released. Finally, a termination of a process is a detectable event, which allows other processes (e.g. supervisors) to take some corrective measures and help the system heal itself.

As a result, Erlang-style fault-tolerance is IMO a one-size-fits-all. We use the same approach to improve the fault-tolerance of individual small tasks (e.g. request handlers), as well as other background services, or larger parts of our system. I like to think that the supervision tree is our service manager (like systemd, upstart, or Windows service manager). It gives us the same capabilities and the same guarantees, it’s highly concurrent, and it’s built into our main language of choice.

In contrast, in most other technologies, you need to use a combination of try/catch together with microservices backed by an external service manager, and in some cases you might need to resort to your own homegrown patterns (e.g. if you need to propagate a failure of one small activity across microservice boundaries). Therefore, I consider these other solutions to be both more complex and less reliable than the Erlang approach.

HTH

Well, responding to the original @rower687 question, I have a tangible example of an advantage of the “let it crash” philosophy: if you take it to extreme levels, you would no longer put catch-all clauses (clauses matching every possible result) in your case expressions.

The argument for this is just what @sasajuric said:

Excerpting it a little bit: exceptions like CaseClauseError are better errors to get than unexpected values escaping out of your own code.

I’ve posted this (with an example) on another topic: