Hello again!

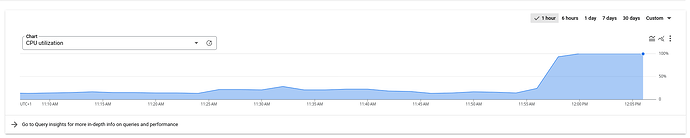

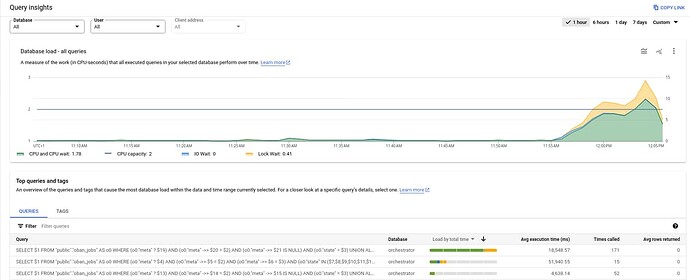

Following yesterday’s topic success we have tried to deploy the new version with the updated code to production but we are running into issues where the database load spikes to 100%. I have a feeling this would not happen if we had shorter pruning time for our jobs table since the same issues does not happen in other environments like our Sandbox environment but would be great to really understand the underlying issue.

For reference, this is the update:

Oban: from 2.17.3 to 2.17.10Oban Pro: from 1.3.0 to 1.4.9Oban Web: from 2.10.2 to 2.10.4

And our database:

- PostgreSQL 13.13

- 2 vcpus

- 7.5GB ram

I have some screenshots and evidence from our Cloud SQL instance that should help to understand the issue, I will include them in details tags so the thread looks nice.

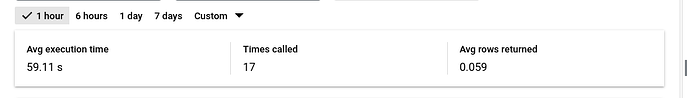

Query 1 and call times

SELECT

$1

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $19)

AND (o0."meta" ->> $20 = $2)

AND (o0."meta" ->> $21 IS NULL)

AND (o0."state" = $3)

UNION ALL (

SELECT

$4

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $22)

AND (o0."meta" ->> $23 = $5)

AND (o0."meta" ->> $24 IS NULL)

AND (o0."state" = $6)

LIMIT

$25)

UNION ALL (

SELECT

$7

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $26)

AND (o0."meta" ->> $27 = $8)

AND (o0."meta" ->> $28 IS NULL)

AND (o0."state" = $9)

LIMIT

$29)

UNION ALL (

SELECT

$10

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $30)

AND (o0."meta" ->> $31 = $11)

AND (o0."meta" ->> $32 IS NULL)

AND (o0."state" = $12)

LIMIT

$33)

UNION ALL (

SELECT

$13

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $34)

AND (o0."meta" ->> $35 = $14)

AND (o0."meta" ->> $36 IS NULL)

AND (o0."state" = $15)

LIMIT

$37)

UNION ALL (

SELECT

$16

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $38)

AND (o0."meta" ->> $39 = $17)

AND (o0."meta" ->> $40 IS NULL)

AND (o0."state" = $18)

LIMIT

$41)

LIMIT

$42

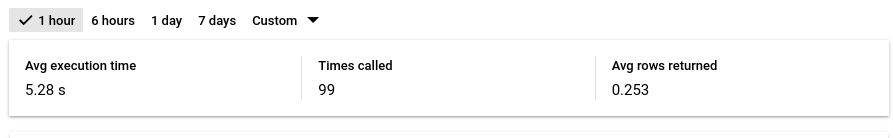

Query 2 and call times

SELECT

$1

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $4)

AND (o0."meta" ->> $5 = $2)

AND (o0."meta" ->> $6 = $3)

AND (o0."state" IN ($7,

$8,

$9,

$10,

$11,

$12,

$13))

Query 3 and call times

SELECT

$1

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $13)

AND (o0."meta" ->> $14 = $2)

AND (o0."meta" ->> $15 IS NULL)

AND (o0."state" = $3)

UNION ALL (

SELECT

$4

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $16)

AND (o0."meta" ->> $17 = $5)

AND (o0."meta" ->> $18 IS NULL)

AND (o0."state" = $6)

LIMIT

$19)

UNION ALL (

SELECT

$7

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $20)

AND (o0."meta" ->> $21 = $8)

AND (o0."meta" ->> $22 IS NULL)

AND (o0."state" = $9)

LIMIT

$23)

UNION ALL (

SELECT

$10

FROM

"public"."oban_jobs" AS o0

WHERE

(o0."meta" ? $24)

AND (o0."meta" ->> $25 = $11)

AND (o0."meta" ->> $26 IS NULL)

AND (o0."state" = $12)

LIMIT

$27)

LIMIT

$28

What our job numbers looks like

Any ideas? Did I miss something in the changelogs that could have caused this?

Thanks for the help and sorry for the fat finger ghost thread I created just before ![]()