I’ve been working on a lib to facilitate building and deploying releases. The idea is that it will evolve into something that can also do/support devops work, but the first phase is just to build releases (either normally or using a Docker flow) and deploy them to one or many target hosts. Its interface is similar to edeliver's, but it uses Elixir (shelling out to cmd locally) and Erlang’s ssh module to connect to the remote. It’s working fine for this first step, and I would like to know whether you think this would be useful and what kinds of things you would want to see in it.

It needs to be initialized before use:

```shell
mix deployer init
```

Then the configuration has to be filled in:

```elixir
# Use this file to configure the options for the Deployer lib.
import Config

config :targets, [
  prod: [
    # host to connect to by ssh
    host: "host_address",
    # user to connect under
    user: "user_for_the_host",
    # name of the app to be released
    name: "your_app_release_name",
    # path where deployer places its configuration and releases on the target host
    path: "~/some/path/on/the/host",
    # absolute or relative path to the key to use for ssh
    ssh_key: "~/.ssh/your_key",
    # you can apply tags to each release. When you build a release, the `:latest` tag is always
    # added to it and removed from any other release with the same name in the store
    tags: ["some_tag", "another_one"],
    # after deploy, the release package prepared during build is untarred on the server; you can
    # execute additional steps after deploying. It can be omitted or set to nil
    after_mfa: nil # {Module, :function_name, ["arg1", 2, :three]}
  ]
  # ,
  # another_target: [ .... ]
]

# config :groups, [
#   name_of_group: [:prod, :another_target],
#   can_be_many: [:one_host, :another_one]
# ]

config :builders, [
  prod: {Deployer.Builder.Docker, :build, []}
]
```
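To make the tagging rule from the config comments concrete, here is a minimal sketch of how a local store could apply it. The store is modelled as a plain list of maps, and `DeployerSketch.Store` with its functions is hypothetical, not part of Deployer's actual API.

```elixir
defmodule DeployerSketch.Store do
  @moduledoc """
  Hypothetical in-memory model of the local release store, only to illustrate
  the rule: a new build always gets `:latest`, and `:latest` is removed from
  every other release with the same name.
  """

  @type release :: %{name: String.t(), version: String.t(), tags: [String.t() | atom()]}

  @spec add_release([release()], map(), [String.t() | atom()]) :: [release()]
  def add_release(store, %{name: name} = release, configured_tags) do
    # Strip :latest from older releases of the same app; other apps are untouched.
    store =
      Enum.map(store, fn
        %{name: ^name, tags: tags} = old -> %{old | tags: List.delete(tags, :latest)}
        other -> other
      end)

    # The new release carries the configured tags plus :latest, without duplicates.
    new_release = Map.put(release, :tags, Enum.uniq(configured_tags ++ [:latest]))
    store ++ [new_release]
  end

  @spec latest([release()], String.t()) :: release() | nil
  def latest(store, name) do
    Enum.find(store, fn r -> r.name == name and :latest in r.tags end)
  end
end
```

Because only same-name releases lose `:latest`, each app in the store keeps exactly one release marked as latest.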

Then, as with edeliver, one can run:

```shell
mix deployer build_and_deploy target=prod
```

In this case, this builds a release according to the project definition inside a Docker container, using the specified Dockerfile (by default, the one at the root of the project), extracts a tar of the release, copies it to the local store, then connects to the host, uploads the tar, unpacks it and symlinks it. The same as individual steps would be:

```shell
mix deployer build target=prod
mix deployer deploy target=prod
```
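The remote half of that flow (unpack and symlink) boils down to a few shell commands. Below is a minimal sketch that only builds the command strings, assuming a hypothetical layout with uploads in `<path>/uploads`, releases in `<path>/releases/<version>` and a `current` symlink; `DeployerSketch.Remote` is illustrative, not the library's code.

```elixir
defmodule DeployerSketch.Remote do
  @moduledoc """
  Hypothetical sketch: the commands a deploy step could run on the target after
  the release tarball has been uploaded. The directory layout is an assumption.
  """

  @spec deploy_commands(keyword(), String.t(), String.t()) :: [String.t()]
  def deploy_commands(target, version, tarball_name) do
    base = Keyword.fetch!(target, :path)
    release_dir = Path.join([base, "releases", version])
    tarball = Path.join([base, "uploads", tarball_name])
    current = Path.join(base, "current")

    [
      # Create a fresh directory for this version.
      "mkdir -p #{release_dir}",
      # Unpack the uploaded (gzipped) release tarball into it.
      "tar -xzf #{tarball} -C #{release_dir}",
      # Repoint `current`; -n replaces an existing symlink instead of following it.
      "ln -sfn #{release_dir} #{current}"
    ]
  end
end
```

Each string could then be run over an existing connection with `:ssh_connection.exec/4`. The paths are interpolated unquoted so that a leading `~` (as in the `path` example above) still expands in the remote shell, which assumes they contain no spaces or shell metacharacters.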

As well as:

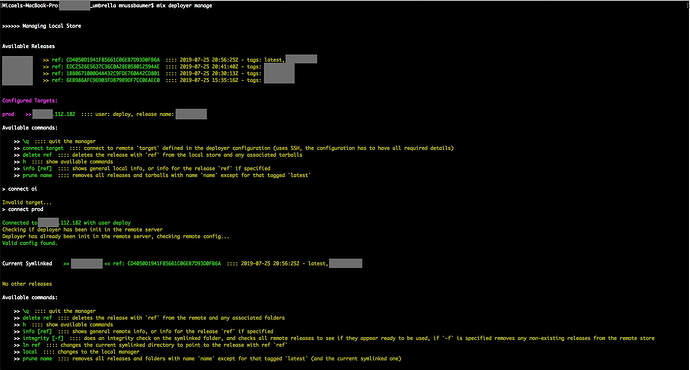

```shell
mix deployer manage
mix deployer manage.remote target=prod
```

These will be the basis for the “devops” features.

I intend to make each step configurable so that flows can be built on top of it, and to expose some helpers and modules that users can use in their own flows (like the ssh server and file uploads). The build and deploy parts will be swappable with any MFA, to which the “build”/“deploy” context is passed, and there will be before and after hooks, etc.
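One way to get swappable steps and before/after hooks is to treat every step, including something like `after_mfa`, as an MFA that receives the context as its first argument and returns an updated context. A minimal sketch under that assumption; `DeployerSketch.Pipeline`, `DeployerSketch.ExampleSteps` and the `{:ok, ctx}` / `{:error, reason}` contract are all hypothetical:

```elixir
defmodule DeployerSketch.Pipeline do
  @moduledoc """
  Hypothetical flow runner: each step is `{module, function, extra_args}` and is
  called as `apply(module, function, [context | extra_args])`.
  """

  @type step :: {module(), atom(), list()}

  @spec run(map(), [step()]) :: {:ok, map()} | {:error, term()}
  def run(context, steps) do
    Enum.reduce_while(steps, {:ok, context}, fn {mod, fun, args}, {:ok, ctx} ->
      case apply(mod, fun, [ctx | args]) do
        # Thread the updated context into the next step.
        {:ok, new_ctx} -> {:cont, {:ok, new_ctx}}
        # Stop the whole flow at the first failing step.
        {:error, _reason} = error -> {:halt, error}
      end
    end)
  end

  @doc "Wraps a main step with optional before/after hooks (nil means no hook)."
  @spec with_hooks(step() | nil, step(), step() | nil) :: [step()]
  def with_hooks(before_hook, main_step, after_hook) do
    Enum.reject([before_hook, main_step, after_hook], &is_nil/1)
  end
end

defmodule DeployerSketch.ExampleSteps do
  # Hypothetical steps used only to demonstrate the runner.
  def log(ctx, label), do: {:ok, Map.update(ctx, :log, [label], &(&1 ++ [label]))}
  def fail(_ctx, reason), do: {:error, reason}
end
```

Prepending the context to the configured args keeps the existing config shape (`{Module, :function_name, [...]}`) while still giving every step access to the build or deploy state, and `Enum.reduce_while/3` makes a failing step halt the rest of the flow.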

Would it be useful to release this as an open source lib? Any suggestions as to what it should be able to do?