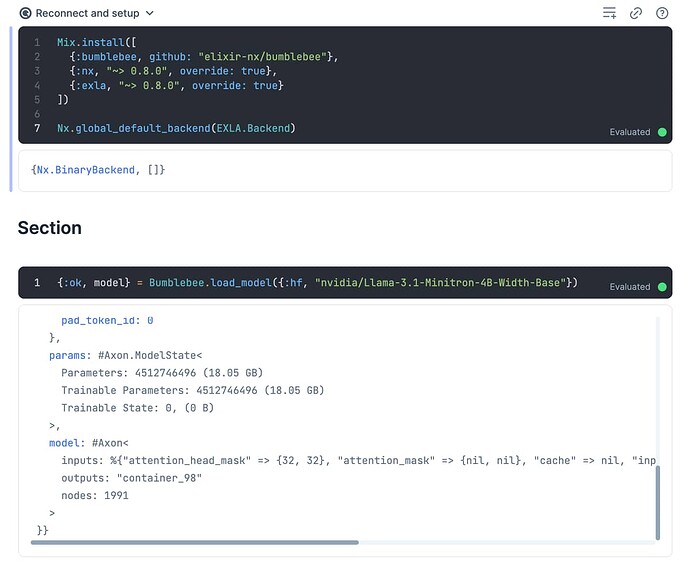

Thanks. I'm not sure whether I made progress, but I now get a new error:

** (RuntimeError) conversion failed, invalid format for "rope_scaling", got: %{"long_factor" => [1.0800000429153442, 1.1100000143051147, 1.1399999856948853, 1.340000033378601, 1.5899999141693115, 1.600000023841858, 1.6200000047683716, 2.620000123977661, 3.2300000190734863, 3.2300000190734863, 4.789999961853027, 7.400000095367432, 7.700000286102295, 9.09000015258789, 12.199999809265137, 17.670000076293945, 24.46000099182129, 28.57000160217285, 30.420001983642578, 30.840002059936523, 32.590003967285156, 32.93000411987305, 42.320003509521484, 44.96000289916992, 50.340003967285156, 50.45000457763672, 57.55000305175781, 57.93000411987305, 58.21000289916992, 60.1400032043457, 62.61000442504883, 62.62000274658203, 62.71000289916992, 63.1400032043457, 63.1400032043457, 63.77000427246094, 63.93000411987305, 63.96000289916992, 63.970001220703125, 64.02999877929688, 64.06999969482422, 64.08000183105469, 64.12000274658203, 64.41000366210938, 64.4800033569336, 64.51000213623047, 64.52999877929688, 64.83999633789062], "short_factor" => [1.0, 1.0199999809265137, 1.0299999713897705, 1.0299999713897705, 1.0499999523162842, 1.0499999523162842, 1.0499999523162842, 1.0499999523162842, 1.0499999523162842, 1.0699999332427979, 1.0999999046325684, 1.1099998950958252, 1.1599998474121094, 1.1599998474121094, 1.1699998378753662, 1.2899998426437378, 1.339999794960022, 1.679999828338623, 1.7899998426437378, 1.8199998140335083, 1.8499997854232788, 1.8799997568130493, 1.9099997282028198, 1.9399996995925903, 1.9899996519088745, 2.0199997425079346, 2.0199997425079346, 2.0199997425079346, 2.0199997425079346, 2.0199997425079346, 2.0199997425079346, 2.0299997329711914, 2.0299997329711914, 2.0299997329711914, 2.0299997329711914, 2.0299997329711914, 2.0299997329711914, 2.0299997329711914, 2.0299997329711914, 2.0299997329711914, 2.0799996852874756, 2.0899996757507324, 2.189999580383301, 2.2199995517730713, 2.5899994373321533, 2.729999542236328, 2.749999523162842, 2.8399994373321533], "type" => "longrope"}

(bumblebee 0.5.3) lib/bumblebee/shared/converters.ex:20: anonymous fn/3 in Bumblebee.Shared.Converters.convert!/2

(elixir 1.17.2) lib/enum.ex:2531: Enum."-reduce/3-lists^foldl/2-0-"/3

(bumblebee 0.5.3) lib/bumblebee/shared/converters.ex:14: Bumblebee.Shared.Converters.convert!/2

(bumblebee 0.5.3) lib/bumblebee/text/phi3.ex:447: Bumblebee.HuggingFace.Transformers.Config.Bumblebee.Text.Phi3.load/2

(bumblebee 0.5.3) lib/bumblebee.ex:452: Bumblebee.do_load_spec/4

(bumblebee 0.5.3) lib/bumblebee.ex:603: Bumblebee.maybe_load_model_spec/3

(bumblebee 0.5.3) lib/bumblebee.ex:591: Bumblebee.load_model/2

#cell:ihx26ou7pekcazv2:1: (file)

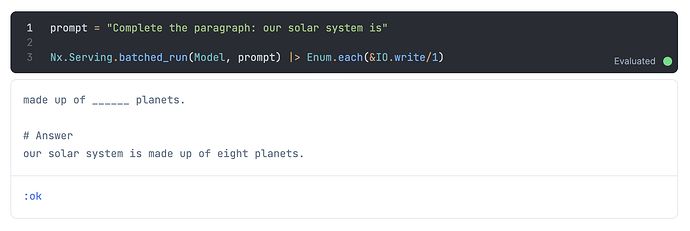

I have a few more questions. Let me know whether I should open another issue, or whether they can be answered here if they fall within your domain.

I am running Livebook in a container, with the /data folder mapped to a volume.

- My notebooks are being saved, but the models are downloaded again on every load. Where are the models downloaded to? Can I map that location to a volume to avoid re-downloading, or is everything held in memory for now?

- I pass environment variables to the container and can see them inside the running container, but they are not available in Livebook. What is needed to make the container's environment variables accessible to Livebook notebooks?