In my humble opinion, test-driven development only works when you know beforehand exactly what you are going to solve, and how you are going to solve it. In practice, this is of course rarely the case; a project specification is far from constant.

I have worked with teams in the past that had far too rigid tests, which meant that every feature change had to be made both in the code and in, say, 20+ tests. As the ‘Why Most Unit Testing is Waste’ paper (which the thread @StefanHoutzager linked to discusses in more detail) says: a test that never fails tells you just as little as a test that always fails.

Also, ‘code coverage’ is about as good an indication of how well-tested your application is as ‘lines of code per day’ is of developer productivity.

What I do think is important is the mindset behind TDD, which is: think before you code. In practice, I write most tests after I’ve written my code, but I do believe that tests are important. It is a delicate balance: with too few tests, your application might behave strangely at moments you do not expect; with too many tests, your application becomes hard to maintain. Sola dosis facit venenum (the dose makes the poison).

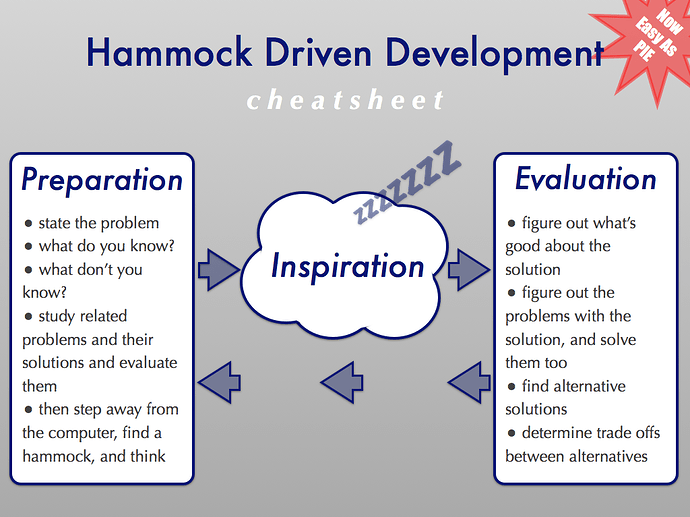

I myself am a great fan of Hammock-Driven Development, because it results in better code even without requiring you to write all tests beforehand (and because hammocks are awesome, of course!).

As for testing in Elixir: I wholeheartedly agree with the statement you quoted above from the Udemy Elixir Bootcamp course. Doctests are great as examples and as simple unit/regression tests. When you need to test edge cases that only arise in a more complex context, those tests are better written in their own test file.

Another thing: many people who are new to Elixir think that the “Let it Crash” mentality also means “Let it burn”. But I think what we usually mean is: we write tests to avoid the simple bugs, but we don’t actively hunt for Heisenbugs, because those will find you. Heisenbugs are ridiculously hard to spot, and instead of wasting too much time on them (and still possibly missing one), it is better to just make your application fault-tolerant: prevent it from burning down even when it crashes.