I'm new to Elixir, and I've been thinking about a few ways to hit a website repeatedly, as fast as possible, while keeping count of the number of requests.

I read this Stack Overflow question: How can I schedule code to run every few hours in Elixir or Phoenix framework?

and also saw the same pattern in the GenServer docs (Elixir v1.17.1).

So I did something like this:

```elixir
defmodule Scraper.Scrape do
  use GenServer

  def start_link(_opts \\ []) do
    # The request counter starts at 1 and lives in this process's state
    GenServer.start_link(__MODULE__, %{count: 1})
  end

  @impl true
  def init(state) do
    # Schedule work to be performed at some point
    schedule_work()
    {:ok, state}
  end

  @impl true
  def handle_info(:work, state) do
    IO.puts("HANDLING INFO for #{inspect(self())}")
    scrape(state)
    # Reschedule once more
    schedule_work()
    {:noreply, Map.update!(state, :count, &(&1 + 1))}
  end

  defp schedule_work do
    # Send :work to this process after 1 ms
    Process.send_after(self(), :work, 1)
  end

  defp scrape(state) do
    url = "http://www.ebay.com"
    count = state[:count]
    IO.puts("HTTP get for ##{count} before get for #{inspect(self())}")

    # Make a single request per tick and handle every possible result
    case HTTPoison.get(url) do
      {:ok, %HTTPoison.Response{status_code: 200}} ->
        IO.puts("SUCCESS")

      {:ok, %HTTPoison.Response{status_code: 404}} ->
        IO.puts("Not found :(")

      {:ok, %HTTPoison.Response{status_code: code}} ->
        # Any other status (e.g. a redirect) would otherwise raise CaseClauseError
        IO.puts("Unexpected status #{code}")

      {:error, %HTTPoison.Error{reason: reason}} ->
        IO.puts("HTTPoison.Error = #{inspect(reason)}")
    end
  end
end
```

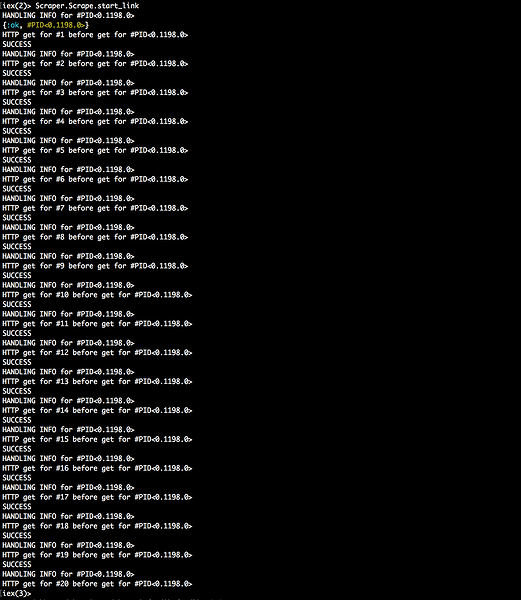

Output:

It's synchronous right now. Is there a way to do this asynchronously, so that many requests to the same URL are in flight at once?
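A minimal sketch of one option, assuming the goal is to send a fixed number of requests with bounded concurrency: `Task.async_stream/3` runs each request in its own process, at most `concurrency` at a time, and the results are tallied as they complete. The module `Scraper.Burst` and its functions are hypothetical. The default fetch uses Erlang's built-in `:httpc` client instead of HTTPoison so the sketch has no dependencies, which means you need to call `:inets.start()` (plus `:ssl.start()` for https URLs) first.

```elixir
defmodule Scraper.Burst do
  # Hypothetical helper: fires `total` GET requests at one URL with at most
  # `concurrency` in flight, and counts 200 responses vs. everything else.

  # Default fetch function using Erlang's :httpc (requires :inets started).
  # Returns {:ok, status_code} or {:error, reason}.
  def httpc_get(url) do
    case :httpc.request(:get, {String.to_charlist(url), []}, [], []) do
      {:ok, {{_version, status, _reason_phrase}, _headers, _body}} -> {:ok, status}
      {:error, reason} -> {:error, reason}
    end
  end

  def run(url, total, concurrency, fetch \\ &__MODULE__.httpc_get/1) do
    1..total
    |> Task.async_stream(fn _n -> fetch.(url) end,
      max_concurrency: concurrency,
      # Kill a request that takes longer than 10 s instead of crashing the caller
      timeout: 10_000,
      on_timeout: :kill_task,
      # Count results in completion order; request order does not matter here
      ordered: false
    )
    |> Enum.reduce(%{success: 0, failure: 0}, fn
      {:ok, {:ok, 200}}, acc -> Map.update!(acc, :success, &(&1 + 1))
      # Non-200 statuses, client errors and {:exit, :timeout} all land here
      _other, acc -> Map.update!(acc, :failure, &(&1 + 1))
    end)
  end
end
```

For example, `Scraper.Burst.run("http://www.ebay.com", 1_000, 50)` returns a map with `:success` and `:failure` counts. To keep going indefinitely, you could call `run/4` in a loop and add up the counts.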

I know I can start multiple processes of this GenServer by calling:

```elixir
{:ok, pid1} = Scraper.Scrape.start_link()
{:ok, pid2} = Scraper.Scrape.start_link()
```
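Starting several `Scraper.Scrape` processes works, but each one keeps its own `:count` in its state, so there is no single total. A minimal sketch of one way to share the count, assuming OTP 21.2 or later for Erlang's `:counters` module: every worker increments the same counter atomically. `Scraper.Worker` and its `:counter`, `:url`, and `:fetch` options are hypothetical names; `fetch` is any one-arity function that performs the request.

```elixir
defmodule Scraper.Worker do
  # Hypothetical worker: all workers share one :counters reference, so the
  # request count is global instead of per-process.
  use GenServer

  def start_link(opts), do: GenServer.start_link(__MODULE__, opts)

  @impl true
  def init(opts) do
    state = %{
      counter: Keyword.fetch!(opts, :counter),
      url: Keyword.fetch!(opts, :url),
      fetch: Keyword.fetch!(opts, :fetch)
    }

    # Start working immediately, with no delay between requests
    send(self(), :work)
    {:ok, state}
  end

  @impl true
  def handle_info(:work, state) do
    _result = state.fetch.(state.url)
    # Atomically add 1 to slot 1; safe to call from many processes at once
    :counters.add(state.counter, 1, 1)
    send(self(), :work)
    {:noreply, state}
  end
end
```

Usage would look like `counter = :counters.new(1, [:atomics])`, then starting N workers with the same `counter`, and reading the total with `:counters.get(counter, 1)`. Each worker still waits for its own response before sending the next request, so the number of workers is roughly the number of requests in flight.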

After reading the links below, I've been thinking about how to use Task.async for this, but I haven't come up with a good solution.
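If you want to stay with `Task.async/1`, one hedged option is to work in rounds: start a batch of tasks, wait for all of them with `Task.await_many/2` (available since Elixir 1.11), and repeat. `Scraper.Rounds` is a hypothetical name, and `fetch` is assumed to return `{:ok, status}` or `{:error, reason}`; with HTTPoison that could be a wrapper such as `fn url -> with {:ok, %HTTPoison.Response{status_code: s}} <- HTTPoison.get(url), do: {:ok, s} end`.

```elixir
defmodule Scraper.Rounds do
  # Hypothetical helper: each round spawns `batch` tasks with Task.async/1,
  # waits for all of them with Task.await_many/2, then starts the next round.
  # Returns the total number of requests that came back with status 200.
  def run(url, rounds, batch, fetch) do
    Enum.reduce(1..rounds, 0, fn _round, count ->
      results =
        1..batch
        |> Enum.map(fn _ -> Task.async(fn -> fetch.(url) end) end)
        # Exits the caller if any task takes longer than 10 s
        |> Task.await_many(10_000)

      count + Enum.count(results, &match?({:ok, 200}, &1))
    end)
  end
end
```

The trade-off: each round lasts as long as its slowest request, and because `Task.async/1` links the task to the caller, a crashing request takes the caller down with it. `Task.async_stream/3` avoids the first issue by starting a new request as soon as one finishes.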

EATBenchmark, an Elixir Project

http://michal.muskala.eu/2015/08/06/parallel-downloads-in-elixir.html

Any help?

Thx!