Just because you’re using `Enum` doesn’t mean the whole collection gets loaded into memory. Let’s take a simple example:

```elixir
iex(1)> Stream.repeatedly(fn -> 1 end) |> Stream.take(1000)
#Stream<[
  enum: #Function<53.48559900/2 in Stream.repeatedly/1>,
  funs: [#Function<58.48559900/1 in Stream.take_after_guards/2>]
]>
```

This is a rather silly stream that just emits 1 over and over. It’s still a stream, though, in the sense that at this point it hasn’t emitted any values.

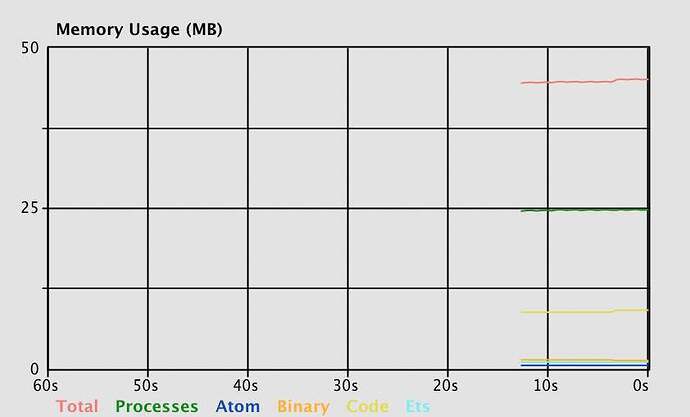
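To check that nothing has been pulled yet, here is a small sketch that counts how often the generator function runs, before and after the stream is consumed. The Erlang `:counters` reference is only an observation aid added for this illustration; the original example doesn’t need it.

```elixir
# Hypothetical instrumentation: a :counters reference that tracks
# how many times the generator function has been called.
calls = :counters.new(1, [])

stream =
  Stream.repeatedly(fn ->
    :counters.add(calls, 1, 1)
    1
  end)
  |> Stream.take(1000)

# Building the pipeline only describes the work; nothing has run yet.
calls_before = :counters.get(calls, 1)

# Enum.count is what actually pulls values through the stream.
total = Enum.count(stream)
calls_after = :counters.get(calls, 1)

IO.puts("before: #{calls_before}, after: #{calls_after}, count: #{total}")
```

The generator count should be 0 before enumeration and 1000 after it: building the pipeline only records the steps, and `Enum.count` is what drives the generator.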

Now suppose we pipe this into `Enum.count`. We know the answer will be 1000, of course, but here is the key thing I want to emphasize: while `Enum.count` does iterate through every item in the stream to compute its result, it doesn’t turn the stream into a list first and then count that list. We can see this if we make the numbers really big and graph the memory usage.

If we convert the stream into a list and then count the list, we see a spike in memory:

```elixir
Stream.repeatedly(fn -> 1 end) |> Stream.take(1_000_000) |> Enum.to_list() |> Enum.count()
```

But if we pipe it straight into `Enum.count`, there is no spike in memory, because it only pulls one item at a time, counts it, and then throws it away.

```elixir
iex(2)> Stream.repeatedly(fn -> 1 end) |> Stream.take(1_000_000) |> Enum.count()
1000000
```
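For a stream, `Enum.count` ends up doing something roughly equivalent to the hand-written reduce below, where the accumulator is a single integer. Materializing the stream with `Enum.to_list` instead allocates one list cell per element. This sketch sizes that list with `:erts_debug.size/1`, an undocumented debugging helper that reports a term’s size in machine words, so treat the number as illustrative rather than a precise memory profile.

```elixir
stream = Stream.repeatedly(fn -> 1 end) |> Stream.take(1_000_000)

# Counting by hand: the accumulator is a single integer,
# however many elements the stream produces.
count = Enum.reduce(stream, 0, fn _item, acc -> acc + 1 end)

# Materializing the stream allocates one cons cell (two words) per element.
list = Enum.to_list(stream)
words = :erts_debug.size(list)

IO.puts("count: #{count}, list size: #{words} words")
```

With small integers as elements, each list cell costs two words, so the list comes out at two million words (roughly 16 MB on a 64-bit VM), while the counting accumulator never grows.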

Enum.reduce_while

Now, to address the function you actually asked about: `Enum.reduce_while`, and really all of the `Enum` functions, work the way `Enum.count` does. They only use more memory when their result has to hold on to the whole collection (as with `Enum.to_list` or `Enum.map`, which return lists). So if your reducer keeps the whole collection in the accumulator, it’ll use a lot of memory, and if it doesn’t, it won’t. Even if a `Stream.reduce_while` existed, it would have the same memory characteristics, because the reducer you write is what decides what gets kept.
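A minimal sketch of that difference, using the same kind of stream. The threshold of 500 is an arbitrary value picked for illustration: the first reducer keeps only a running sum, the second keeps every element it sees.

```elixir
stream = Stream.repeatedly(fn -> 1 end) |> Stream.take(1_000_000)

# Constant-size accumulator: only a running integer is kept between steps,
# and halting early also stops pulling further items from the stream.
sum =
  Enum.reduce_while(stream, 0, fn x, acc ->
    if acc + x > 500, do: {:halt, acc}, else: {:cont, acc + x}
  end)

# Growing accumulator: every element is prepended to the list,
# so memory grows with the number of elements consumed.
kept =
  Enum.reduce_while(stream, [], fn x, acc ->
    {:cont, [x | acc]}
  end)

IO.puts("sum: #{sum}, kept: #{length(kept)} elements")
```

Both calls go through `Enum.reduce_while` over the same stream; only the second one ends up holding a million-element list, because that is what its accumulator asks for.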