@Exadra37, fair points; I let myself get sloppy while in a rush. To be clear, my question is not “how do I fix this?” so much as “why is this the case?” We have a workaround, but I would like to better understand why this behavior surfaces and what the trade-offs are for projects that use configuration this way.

All that said, here’s a more in-depth look at the situation.

I would prefer to configure the application using `runtime.exs`, with my `App.Repo` configuration looking like this:

```elixir
config :app, App.Repo,
  url: env!("DATABASE_URL", :string!),
  username: env!("DATABASE_USERNAME", :string!, nil),
  password: env!("DATABASE_PASSWORD", :string!, nil),
  hostname: env!("DATABASE_HOSTNAME", :string!, nil),
  stacktrace: env!("DATABASE_STACKTRACE", :boolean?, false),
  show_sensitive_data_on_connection_error: env!("DATABASE_SHOW_SENSITIVE", :boolean?, false),
  pool_size: env!("DATABASE_POOL_SIZE", :integer!, 10),
  pool: env!("DATABASE_POOL_MODULE", :module?),
  database:
    "#{env!("DATABASE_DATABASE", :string!, nil)}#{env!("MIX_TEST_PARTITION", :string?, nil)}"
```

What I would prefer to avoid is configuring the Repo conditionally, leaving out entries when they are not set. I had assumed there was no semantic difference between supplying a key with a value of `nil` and not supplying the key at all. That assumption appears to hold for any number of other keys, but not for the `pool` entry.
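For what it's worth, Elixir itself does distinguish the two cases at the keyword-list level. If an option is read with a default, for example via `Keyword.get/3` (an assumption about how `:pool` is read somewhere between Ecto and DBConnection), an explicit `nil` is returned as-is and the default never kicks in. A minimal illustration, where `:default_pool` is a placeholder rather than the real default:

```elixir
# A keyword list where :pool is explicitly nil, and one where the key is absent.
with_nil = [pool_size: 10, pool: nil]
without_key = [pool_size: 10]

# Keyword.get/3 only falls back to the default when the key is missing,
# so the explicit nil wins over the default.
IO.inspect(Keyword.get(with_nil, :pool, :default_pool))    # nil
IO.inspect(Keyword.get(without_key, :pool, :default_pool)) # :default_pool

# The structural difference is visible directly with Keyword.has_key?/2.
IO.inspect(Keyword.has_key?(with_nil, :pool))    # true
IO.inspect(Keyword.has_key?(without_key, :pool)) # false
```

This lines up with the `pool: nil` entry visible in the options list of the stack trace and the resulting call to `nil.checkout/3`.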

I tried to track down why, but it’s tricky to locate exactly how and where the configuration is read. I ended up finding a lookup function that pulls the data out of ETS here.

The result is the semantics of “if the value is set, it is probably the correct type”, which feels like an odd assumption to make. Is there a reason I’m not thinking of why this might be preferred?

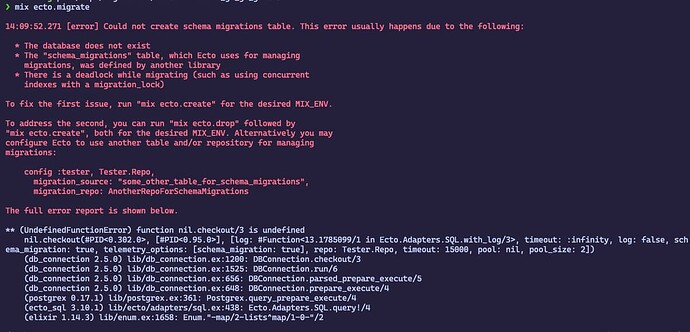
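For comparison, here is a sketch of the lookup semantics I had expected, where `nil` is treated exactly like a missing key. `NilAsAbsent.get/3` and `:default_pool` are hypothetical names for illustration, not anything in Ecto or DBConnection. The sketch checks for `nil` explicitly rather than using `||`, because `||` would also discard an explicit `false`, which matters for boolean options such as `stacktrace:`.

```elixir
defmodule NilAsAbsent do
  # Hypothetical helper: read an option, treating an explicit nil
  # the same as a missing key and falling back to the default.
  def get(opts, key, default) do
    case Keyword.get(opts, key) do
      nil -> default
      value -> value
    end
  end
end

# Both spellings now resolve to the default.
IO.inspect(NilAsAbsent.get([pool: nil], :pool, :default_pool)) # :default_pool
IO.inspect(NilAsAbsent.get([], :pool, :default_pool))          # :default_pool

# An explicit false is preserved, unlike with `Keyword.get(opts, key) || default`.
IO.inspect(NilAsAbsent.get([stacktrace: false], :stacktrace, true)) # false
IO.inspect(Keyword.get([stacktrace: false], :stacktrace) || true)   # true
```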

The other issue is that, at least in the minimal reproduction, the error message is rather misleading:

```
07:42:55.708 [error] Could not create schema migrations table. This error usually happens due to the following:

  * The database does not exist
  * The "schema_migrations" table, which Ecto uses for managing
    migrations, was defined by another library
  * There is a deadlock while migrating (such as using concurrent
    indexes with a migration_lock)

To fix the first issue, run "mix ecto.create" for the desired MIX_ENV.

To address the second, you can run "mix ecto.drop" followed by
"mix ecto.create", both for the desired MIX_ENV. Alternatively you may
configure Ecto to use another table and/or repository for managing
migrations:

    config :show_me, ShowMe.Repo,
      migration_source: "some_other_table_for_schema_migrations",
      migration_repo: AnotherRepoForSchemaMigrations

The full error report is shown below.

** (UndefinedFunctionError) function nil.checkout/3 is undefined
    nil.checkout(#PID<0.302.0>, [#PID<0.95.0>], [log: #Function<13.1785099/1 in Ecto.Adapters.SQL.with_log/3>, timeout: :infinity, log: false, schema_migration: true, telemetry_options: [schema_migration: true], repo: ShowMe.Repo, timeout: 15000, pool: nil, pool_size: 2])
    (db_connection 2.5.0) lib/db_connection.ex:1200: DBConnection.checkout/3
    (db_connection 2.5.0) lib/db_connection.ex:1525: DBConnection.run/6
    (db_connection 2.5.0) lib/db_connection.ex:656: DBConnection.parsed_prepare_execute/5
    (db_connection 2.5.0) lib/db_connection.ex:648: DBConnection.prepare_execute/4
    (postgrex 0.17.1) lib/postgrex.ex:361: Postgrex.query_prepare_execute/4
    (ecto_sql 3.10.1) lib/ecto/adapters/sql.ex:438: Ecto.Adapters.SQL.query!/4
    (elixir 1.14.3) lib/enum.ex:1658: Enum."-map/2-lists^map/1-0-"/2
```

This is after doing the following:

```shell
mix phx.new show_me
cd show_me
# modify config/dev.exs so that ShowMe.Repo has
#   pool: nil
mix ecto.create
mix ecto.migrate
```

To be fair, the stack trace makes it pretty clear what’s happening. However, the initial message can be quite confusing for people who are less comfortable reading stack traces, especially since it presents what appears to be a “blessed” explanation.

All this is to say, there are two obvious ways around this:

- You can configure the Repo conditionally, based on the runtime environment(s).

- You can explicitly supply the same default value that the library would otherwise use.
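Roughly, the two options look like this. `RepoPoolOpts` and its functions are hypothetical, and `pool_env` stands in for the raw value of `DATABASE_POOL_MODULE` (`nil` when unset). Option 2 assumes `DBConnection.ConnectionPool` is DBConnection's default pool, which is worth double-checking against the docs for the version in use.

```elixir
defmodule RepoPoolOpts do
  # Hypothetical helpers sketching the two workarounds.

  # Option 1: only add :pool when a module was actually configured,
  # so the key is absent (rather than nil) otherwise.
  def conditional(base_opts, nil), do: base_opts

  def conditional(base_opts, pool_env),
    do: Keyword.put(base_opts, :pool, Module.concat([pool_env]))

  # Option 2: always set :pool, passing the library default explicitly.
  # Assumption: DBConnection.ConnectionPool is the default pool module.
  def with_default(base_opts, nil),
    do: Keyword.put(base_opts, :pool, DBConnection.ConnectionPool)

  def with_default(base_opts, pool_env), do: conditional(base_opts, pool_env)
end

base = [pool_size: 10]
IO.inspect(RepoPoolOpts.conditional(base, nil))
# [pool_size: 10]
IO.inspect(RepoPoolOpts.conditional(base, "Ecto.Adapters.SQL.Sandbox"))
# [pool: Ecto.Adapters.SQL.Sandbox, pool_size: 10]
IO.inspect(RepoPoolOpts.with_default(base, nil))
# [pool: DBConnection.ConnectionPool, pool_size: 10]
```

In `config/runtime.exs`, the resulting keyword list would then be passed as the third argument to `config :app, App.Repo, ...`.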

In general, I would prefer option 2, but working out what that value ought to be can be challenging when the documentation is dense. It would be far preferable to simply let a `nil` through and have it behave as I would expect, i.e. as if the key had not been set.
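On the application side, one way to approximate that today is to strip `nil` entries before handing the list to `config/3`. A minimal sketch, with placeholder values standing in for the `env!` results:

```elixir
# Placeholder values as they might come back from the env! calls when
# DATABASE_POOL_MODULE and DATABASE_USERNAME are unset.
repo_opts = [
  pool_size: 10,
  pool: nil,
  username: nil,
  hostname: "localhost",
  stacktrace: false
]

# Treat nil as "not configured": drop those keys entirely so that any
# downstream defaults apply. is_nil/1 keeps explicit false values.
compact_opts = Enum.reject(repo_opts, fn {_key, value} -> is_nil(value) end)

IO.inspect(compact_opts)
# [pool_size: 10, hostname: "localhost", stacktrace: false]
```

This still relies on every library treating an absent key as “use the default”, which is the behavior I was expecting from `nil` in the first place.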