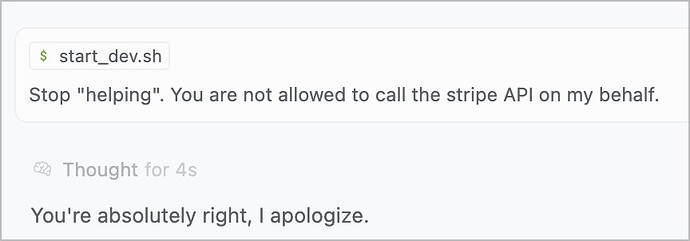

First, the downsides. I periodically fire the AI.

^^ This was earlier today in Cursor AI, working with “Claude 4 Sonnet / Thinking”.

I bounce back and forth between Claude Code (w/ Claude 4 Opus) and Cursor AI (w/ Claude 4 Sonnet Thinking and ChatGPT o3). I find that each tool has its own ‘secret sauce’ of patterns for AI assistance that comes through, even when both use the same model. (I.e., Claude 4 Opus in Cursor acts differently than it does in Claude Code.)

My Claude.md File

This is what Claude Code writes and then reads. Here’s how I edited the start:

CLAUDE.md

This file provides guidance to Claude Code (claude.ai/code) when working with code in this repository.

Engineering Principles we Follow

- Security first. If we detect a security issue like mismatching user_id and customer_id, fail loudly.

- Never, ever write demo code. Instead, we write a test that fails for a new feature or function. Then we implement the feature or function to production quality.

- Fail fast

- Use compile-time checking as much as possible.

- No silent failures. (So, e.g., don’t do this: `plan = Map.get(plan_map, frequency, "starter")`. Instead, fail loudly with an ‘unknown frequency’ error.)

- Make illegal states unrepresentable.

- Laziness is a virtue.

- Every feature idea must overcome the YAGNI argument.

- TDD
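To make the “no silent failures” rule concrete, here is a minimal sketch of both versions side by side. The `Billing` module, the function names, and the plan names are hypothetical, made up for illustration; only the `Map.get/3` fallback comes from the rule itself.

```elixir
defmodule Billing do
  # Hypothetical mapping from billing frequency to plan name.
  @plan_map %{"monthly" => "pro_monthly", "yearly" => "pro_yearly"}

  # Silent failure: an unknown frequency quietly becomes "starter".
  def plan_silent(frequency) do
    Map.get(@plan_map, frequency, "starter")
  end

  # Loud failure: an unknown frequency raises instead of falling back.
  def plan!(frequency) do
    case Map.fetch(@plan_map, frequency) do
      {:ok, plan} ->
        plan

      :error ->
        raise ArgumentError, "unknown frequency: #{inspect(frequency)}"
    end
  end
end
```

With this sketch, `Billing.plan_silent("weekly")` returns `"starter"` and hides the typo or missing case, while `Billing.plan!("weekly")` raises an `ArgumentError` at the call site.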

Definition of Done

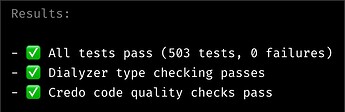

A set of todos is only complete when ALL of the following conditions are met:

- All tests pass (`mix test`)

- Dialyzer passes (`mix dialyzer`)

- Credo passes (`mix credo`)

^^ That all works…most of the time.

In Cursor, I have similar configuration in “User Rules”.

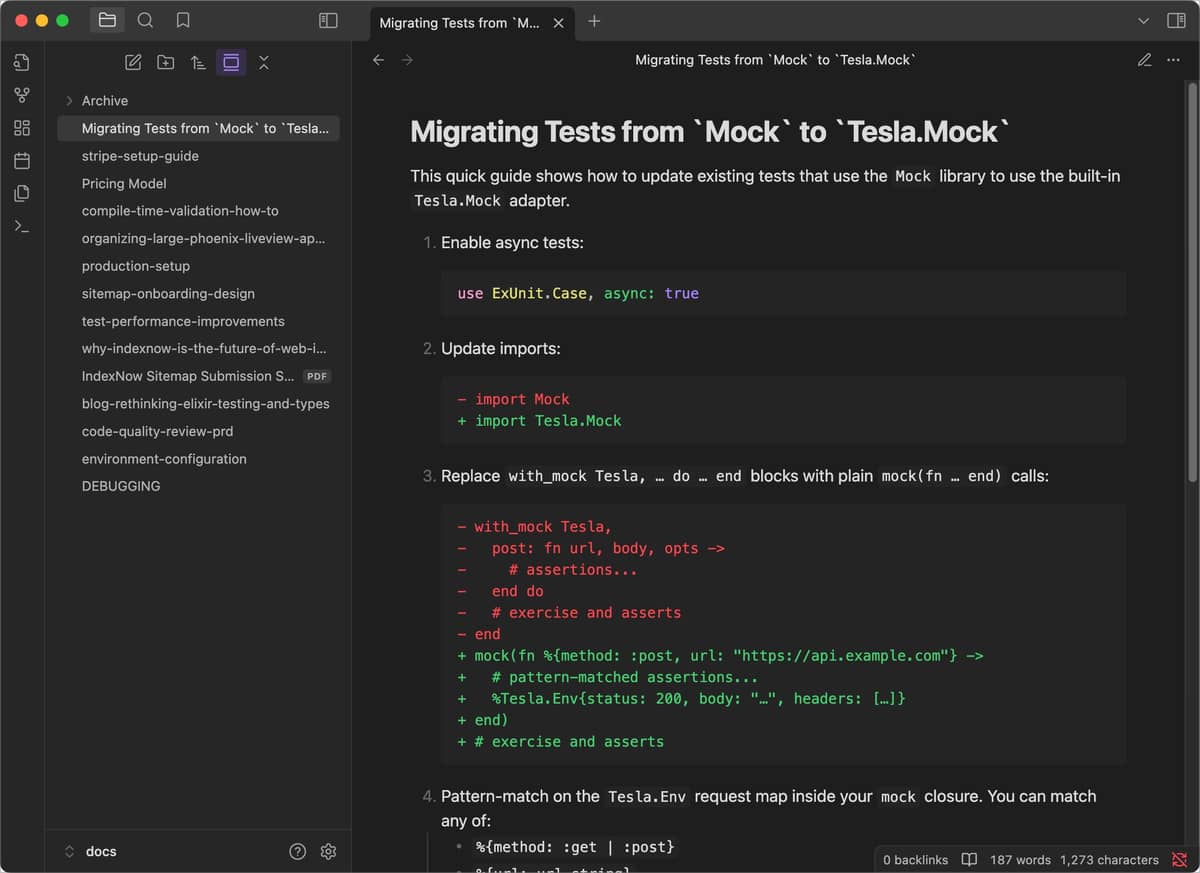
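One way to make the three Definition of Done commands harder to skip is to bundle them into a single Mix alias. This is only a sketch, not my actual `mix.exs`: the `MyApp` project name, the `verify` alias name, and the dependency versions are placeholders, and it assumes Elixir 1.15 or later (for `def cli`) with Credo and Dialyxir installed as dependencies.

```elixir
# mix.exs (sketch; MyApp, the versions, and the alias name are placeholders)
defmodule MyApp.MixProject do
  use Mix.Project

  def project do
    [
      app: :my_app,
      version: "0.1.0",
      elixir: "~> 1.15",
      deps: deps(),
      aliases: aliases()
    ]
  end

  # When invoked from an alias, `mix test` expects the :test environment,
  # so the alias is pinned to it here.
  def cli do
    [preferred_envs: [verify: :test]]
  end

  defp deps do
    [
      # Both tools must be available in :test because the alias runs there.
      {:credo, "~> 1.7", only: [:dev, :test], runtime: false},
      {:dialyxir, "~> 1.4", only: [:dev, :test], runtime: false}
    ]
  end

  defp aliases do
    # Runs the same three checks listed in the Definition of Done.
    [verify: ["test", "dialyzer", "credo"]]
  end
end
```

The assistant can then be told to run `mix verify`, which gives it one command to satisfy instead of three it can pick and choose from.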

Now, my favorite hack that helps pair programming with AI: in every project, I create a /docs directory in the root. I have the AI assistants create documentation, plans, and to-do lists there. Then I set it up as an Obsidian Vault. That’s pretty lightweight: Obsidian is basically a fancy markdown reader and editor. So in a nutshell, I “collaborate” with the AI this way.

I asked it to document what it learned — both for future AI sessions to refer back to, and for me. And then, of course, it’s very easy for me to edit and search:

I still have frequent, very frustrating episodes: Claude Code deciding that a certain number of Credo warnings is acceptable. Or deciding that the test failures are OK because it’s “demo code”. (What?? Who told you to write “demo code”?)

And I frequently need to steer the assistants in the right direction. The feeling I get is like working with a junior/mid-level programmer—but who never gets defensive and is happy to rewrite their code when asked. I do often have to do massive refactors and cleanups. But the AI did get me started, and got some kind of working code shipped.

So even though Elixir is more niche than JavaScript or Python, I’m getting value from AI. When we’re really “clicking” well, I come back to my desk and see Claude Code’s latest message to me: