While not as prevalent as in imperative languages, arrays (collections with efficient random element access) are still very useful in Elixir in certain situations. Until now, however, a stable and idiomatic array library was missing, so people often had to resort to using Erlang’s not-so-idiomatic :array module directly.

Arrays aims to be this stable, efficient and idiomatic array library.
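For comparison, this is roughly what using Erlang's :array module directly from Elixir looks like. It is a minimal sketch that relies only on the OTP standard library; the variable names are illustrative.

```elixir
# Build an array from a list with Erlang's :array module (ships with OTP).
composers = :array.from_list(["Dvorak", "Tchaikovsky", "Bruch"])

# Indices are zero-based, and the array is the LAST argument of each call.
second = :array.get(1, composers)

# Updates return a new array; `composers` itself is left unchanged.
updated = :array.set(2, "Brahms", composers)

count = :array.size(updated)
as_list = :array.to_list(updated)

IO.inspect({second, count, as_list})
```

Because the array is passed as the last argument, these calls do not chain naturally with the `|>` operator, and the plain :array value does not implement Elixir protocols such as Enumerable or Collectable.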

Arrays

Arrays is a library for working with well-structured arrays with fast random element access in Elixir. It offers a common interface with multiple implementations, each with different performance guarantees, which can be switched in your configuration.

Installation

Arrays is available in Hex and can be installed by adding arrays to your list of dependencies in mix.exs:

def deps do
  [
    {:arrays, "~> 2.0"}
  ]
end

Documentation can be found at https://hexdocs.pm/arrays.

Using Arrays

Some simple examples:

Constructing Arrays

By calling Arrays.new or Arrays.empty:

iex> Arrays.new(["Dvorak", "Tchaikovsky", "Bruch"])

#Arrays.Implementations.MapArray<["Dvorak", "Tchaikovsky", "Bruch"]>

iex> Arrays.new(["Dvorak", "Tchaikovsky", "Bruch"], implementation: Arrays.Implementations.ErlangArray)

#Arrays.Implementations.ErlangArray<["Dvorak", "Tchaikovsky", "Bruch"]>

By using Collectable:

iex> [1, 2, 3] |> Enum.into(Arrays.new())

#Arrays.Implementations.MapArray<[1, 2, 3]>

iex> for x <- 1..2, y <- 4..5, into: Arrays.new(), do: {x, y}

#Arrays.Implementations.MapArray<[{1, 4}, {1, 5}, {2, 4}, {2, 5}]>

Some common array operations:

- Indexing is fast.

- All Access calls are supported.

- Variants of many common Enum-like functions that keep the result an array (rather than turning it into a list) are available.

iex> words = Arrays.new(["the", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"])

#Arrays.Implementations.MapArray<["the", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"]>

iex> Arrays.size(words) # Runs in constant-time

9

iex> words[3] # Indexing is fast

"fox"

iex> words = put_in(words[2], "purple") # All of `Access` is supported

#Arrays.Implementations.MapArray<["the", "quick", "purple", "fox", "jumps", "over", "the", "lazy", "dog"]>

iex> # Common operations are available without having to turn the array back into a list (as `Enum` functions would do):

iex> Arrays.map(words, &String.upcase/1) # Map a function, keep result an array

#Arrays.Implementations.MapArray<["THE", "QUICK", "PURPLE", "FOX", "JUMPS", "OVER", "THE", "LAZY", "DOG"]>

iex> lengths = Arrays.map(words, &String.length/1)

#Arrays.Implementations.MapArray<[3, 5, 6, 3, 5, 4, 3, 4, 3]>

iex> Arrays.reduce(lengths, 0, &Kernel.+/2) # `reduce_right` is supported as well.

36

Concatenating arrays:

iex> Arrays.new([1, 2, 3]) |> Arrays.concat(Arrays.new([4, 5, 6]))

#Arrays.Implementations.MapArray<[1, 2, 3, 4, 5, 6]>

Slicing arrays:

iex> ints = Arrays.new(1..100)

iex> Arrays.slice(ints, 9..19)

#Arrays.Implementations.MapArray<[10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20]>

Rationale

Algorithms that use arrays can be written against the common interface, abstracting away from the underlying representation.

Which array implementation is actually used can then be configured and compared later, to trade off ease of use against time and memory efficiency.
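To illustrate the idea of writing an algorithm once and swapping the representation underneath it, here is a small sketch built on an Elixir protocol. The names `IndexedSketch` and `BinarySearchSketch` are hypothetical and exist only for this example; this is not the library's own interface, just the general technique.

```elixir
# Hypothetical protocol: the minimal operations the algorithm needs.
defprotocol IndexedSketch do
  @doc "Returns the number of elements."
  def size(collection)

  @doc "Returns the element at the given zero-based index."
  def get(collection, index)
end

# Representation 1: a tuple.
defimpl IndexedSketch, for: Tuple do
  def size(tuple), do: tuple_size(tuple)
  def get(tuple, index), do: elem(tuple, index)
end

# Representation 2: a map with sequential integer keys.
defimpl IndexedSketch, for: Map do
  def size(map), do: map_size(map)
  def get(map, index), do: Map.fetch!(map, index)
end

defmodule BinarySearchSketch do
  # Binary search over a sorted collection, written only against the protocol.
  def find(collection, target) do
    search(collection, target, 0, IndexedSketch.size(collection) - 1)
  end

  defp search(_collection, _target, low, high) when low > high, do: :error

  defp search(collection, target, low, high) do
    mid = div(low + high, 2)

    case IndexedSketch.get(collection, mid) do
      ^target -> {:ok, mid}
      value when value < target -> search(collection, target, mid + 1, high)
      _larger -> search(collection, target, low, mid - 1)
    end
  end
end

# The same algorithm runs on both representations.
IO.inspect(BinarySearchSketch.find({1, 3, 5, 7}, 5))
IO.inspect(BinarySearchSketch.find(%{0 => 1, 1 => 3, 2 => 5, 3 => 7}, 5))
```

The algorithm never inspects how the elements are stored, so the choice of representation can be made (and benchmarked) separately from the code that uses it.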

Arrays itself comes with two built-in implementations:

- Arrays.Implementations.ErlangArray wraps the Erlang :array module, allowing this time-tested implementation to be used with all common Elixir protocols and syntactic sugar.

- Arrays.Implementations.MapArray is a simple implementation that uses a map with sequential integers as keys.
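The "map with sequential integers as keys" idea can be sketched in a few lines of plain Elixir. The module below, `MapArraySketch`, is a hypothetical and heavily simplified illustration, not the library's actual MapArray code; its function names are assumptions chosen for the example.

```elixir
defmodule MapArraySketch do
  # Hypothetical illustration only: elements live in a map keyed by 0, 1, 2, ...
  defstruct contents: %{}

  # Build from any enumerable, keying each element by its zero-based index.
  def new(enumerable \\ []) do
    contents =
      enumerable
      |> Enum.with_index()
      |> Map.new(fn {value, index} -> {index, value} end)

    %__MODULE__{contents: contents}
  end

  # map_size/1 runs in constant time.
  def size(%__MODULE__{contents: contents}), do: map_size(contents)

  # Raises KeyError when the index is out of bounds.
  def get(%__MODULE__{contents: contents}, index), do: Map.fetch!(contents, index)

  # Replaces an existing element; raises KeyError when out of bounds.
  def replace(%__MODULE__{contents: contents} = array, index, value) do
    %{array | contents: Map.replace!(contents, index, value)}
  end

  # The next free index is always equal to the current size.
  def append(%__MODULE__{contents: contents} = array, value) do
    %{array | contents: Map.put(contents, map_size(contents), value)}
  end

  # Maps are unordered, so sort by index before dropping the keys.
  def to_list(%__MODULE__{contents: contents}) do
    contents
    |> Enum.sort_by(fn {index, _value} -> index end)
    |> Enum.map(fn {_index, value} -> value end)
  end
end

words =
  ["the", "quick", "brown"]
  |> MapArraySketch.new()
  |> MapArraySketch.replace(2, "purple")
  |> MapArraySketch.append("fox")

IO.inspect(MapArraySketch.to_list(words))
```

Taking the array as the first argument is what lets these calls chain with `|>`, in contrast to the argument order of Erlang's :array functions.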

By default, the MapArray implementation is used when creating new arrays. This can be changed either by configuring a different default for your whole application, or by passing an option to a specific invocation of new/0-2 or empty/0-1.

iex> words = Arrays.new(["the", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"])

#Arrays.Implementations.MapArray<["the", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"]>

Is it fast?

I’m proud to finally present a stable release of this library for you all. Work on Arrays started a few years back but was on the backburner because of other projects. Now, I finally had some time to get back to it.

The library is heavily documented, specced and (doc)tested.

I’m very eager to hear your feedback!

~Marten/Qqwy

.

. (List, Enum, Tuple, Integer…Array)

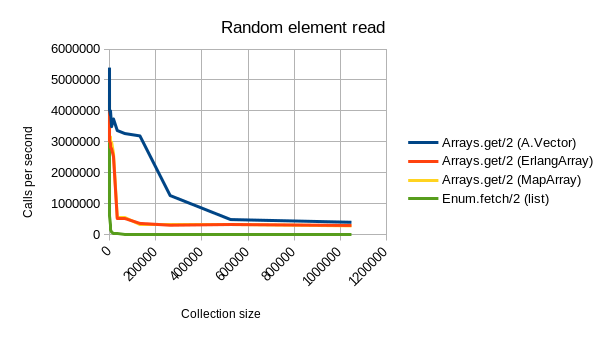

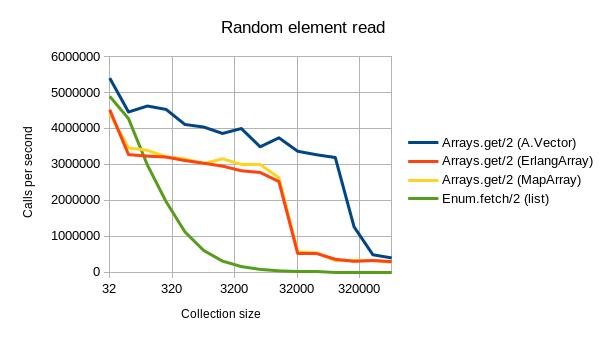

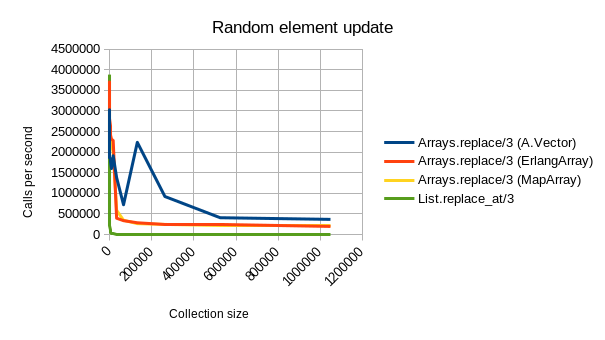

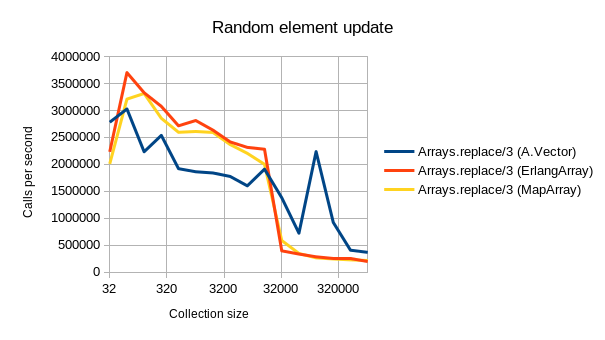

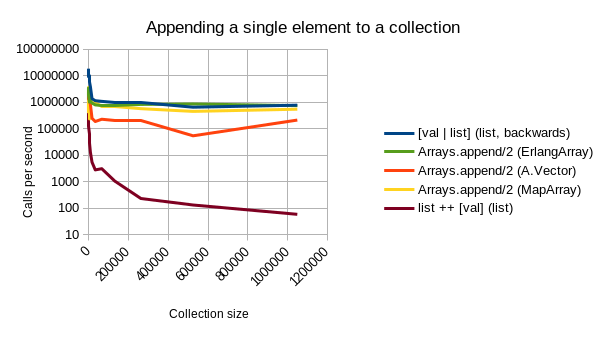

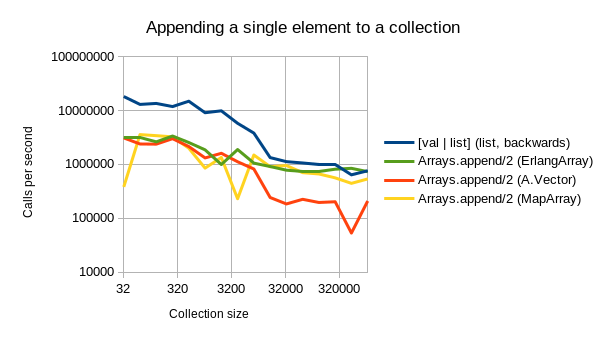

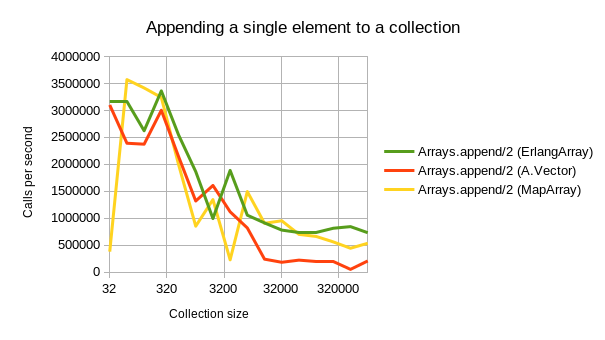

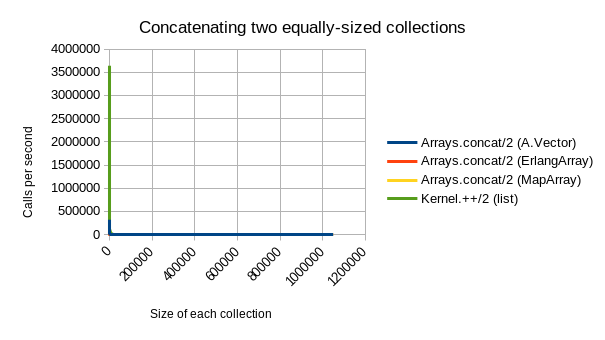

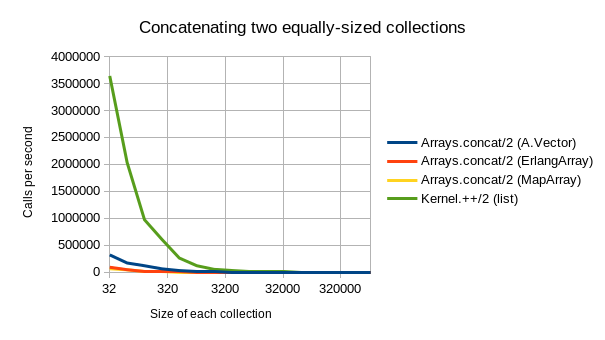

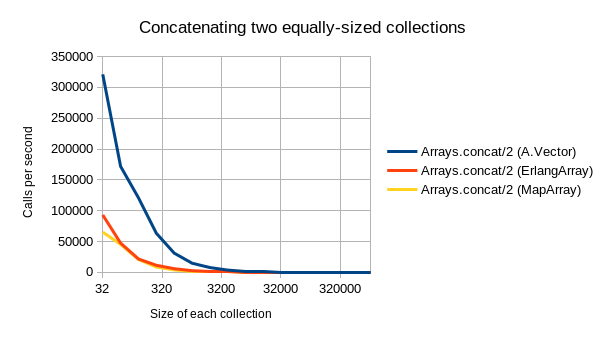

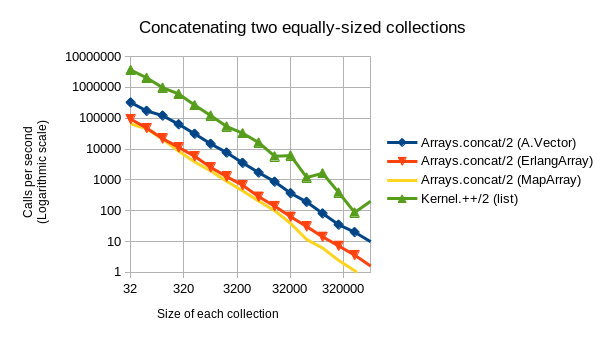

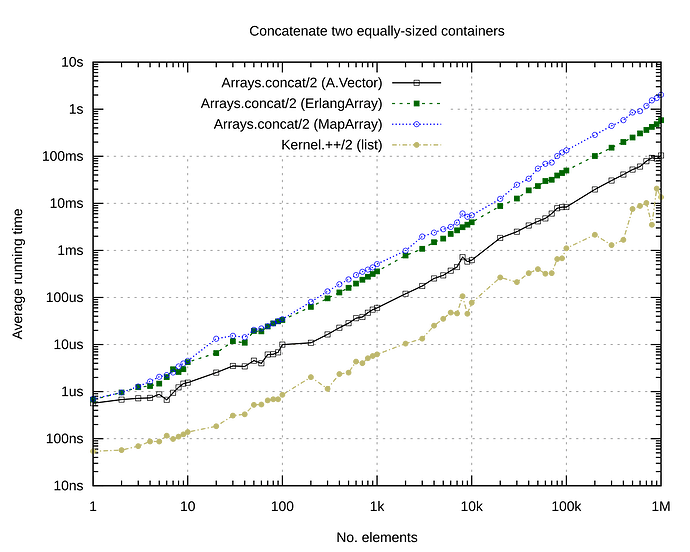

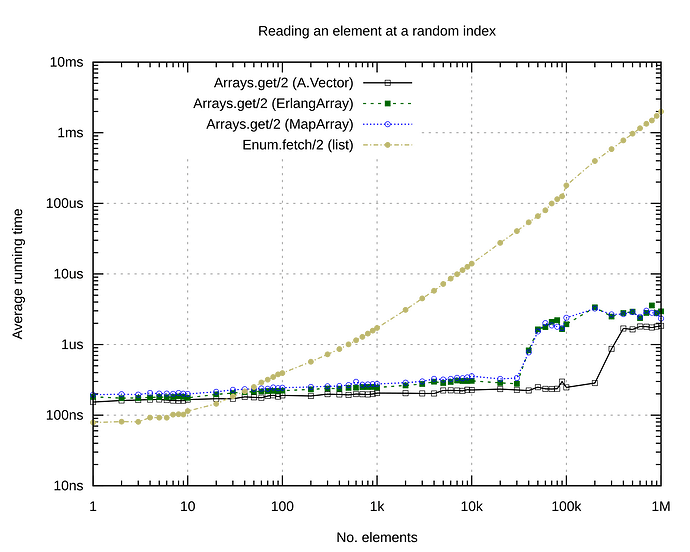

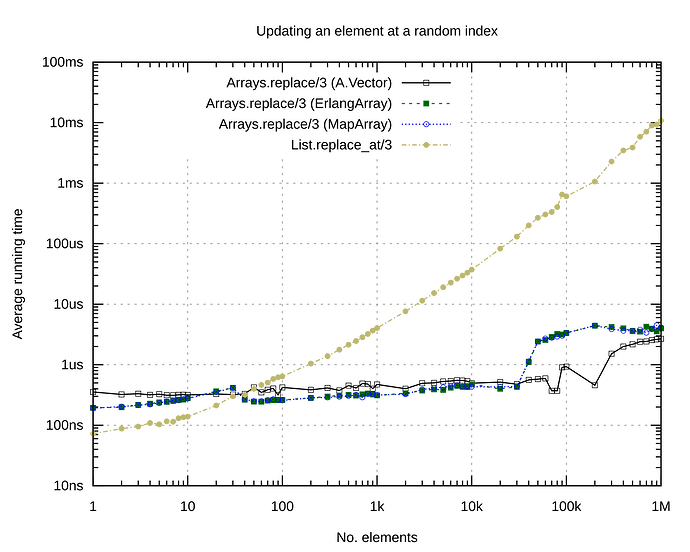

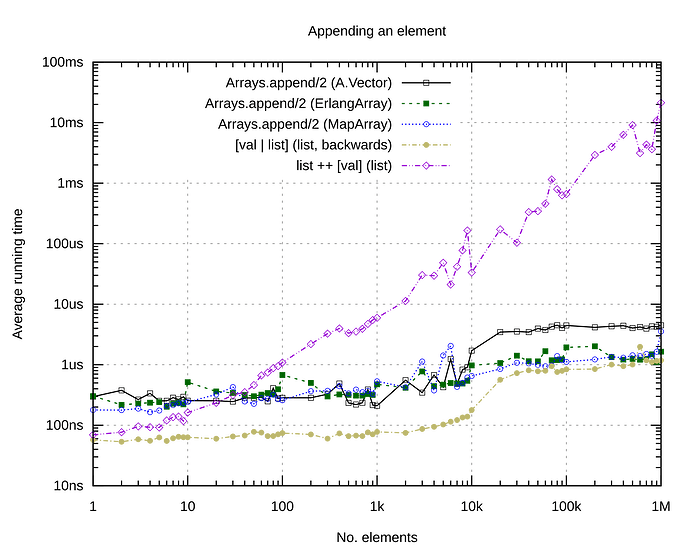

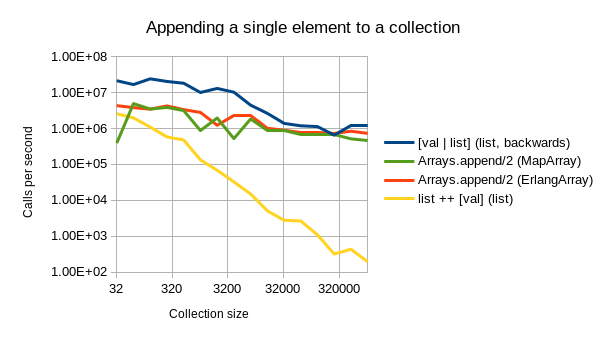

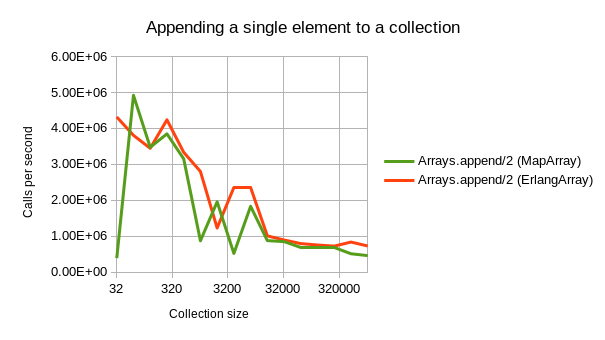

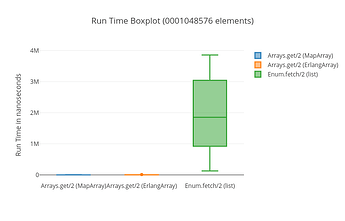

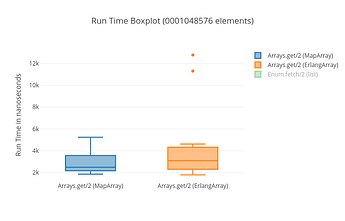

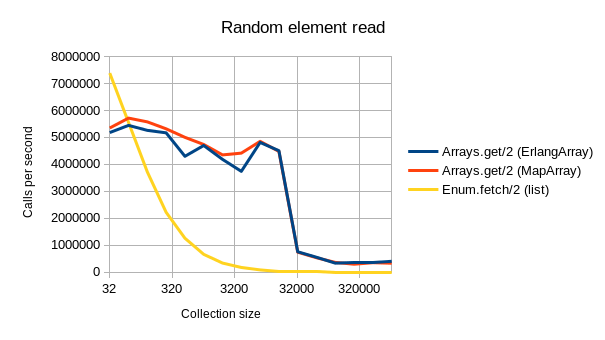

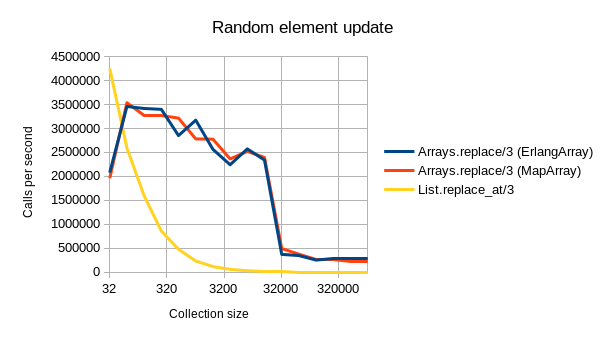

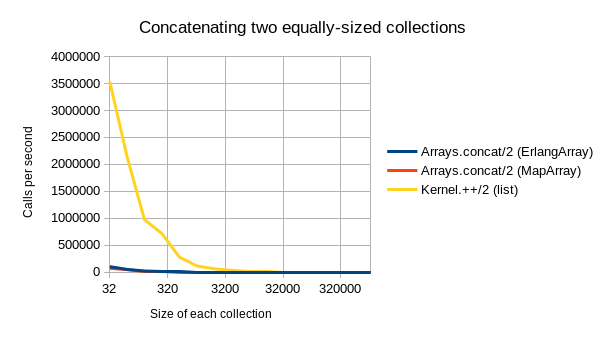

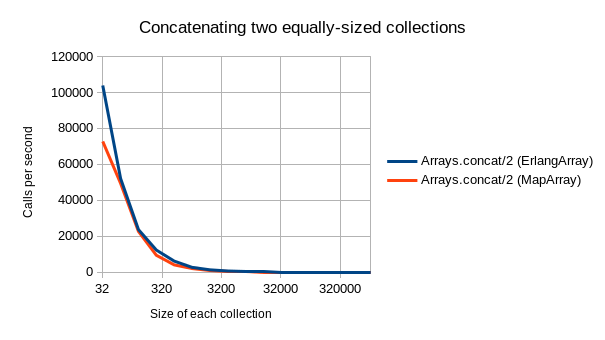

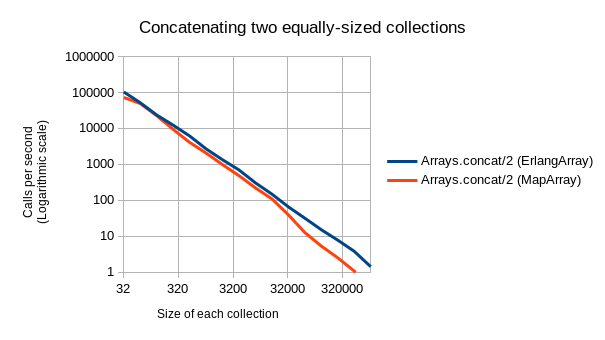

I’ve benchmarked the Rust-based implementation against the existing ErlangArray and MapArray ones, and it turns out that the overhead of the NIF calls significantly overshadows the otherwise highly performant techniques used in this implementation. (Benchmark graphs
